PyTorch

1. Introduction

PyTorch is an open-source machine learning (ML) library developed by Facebook’s AI Research Lab (FAIR) in 2016. Designed for both research and production, PyTorch is especially popular in academia and the ML research community due to its ease of use, dynamic computation graph, and strong support for deep learning. Its flexibility and Pythonic design make PyTorch an accessible, versatile tool that supports a wide range of machine learning tasks, including neural networks, reinforcement learning, and natural language processing (NLP).

2. Key Features of PyTorch

Several features set PyTorch apart as a powerful choice for deep learning and machine learning projects:

- Dynamic Computation Graph: Unlike static computation graphs, which require a full structure upfront, PyTorch’s dynamic computation graph (also known as define-by-run) allows on-the-fly adjustments, making it more intuitive for debugging and experimentation.

- Extensive GPU Support: PyTorch supports GPU acceleration (for example via CUDA on NVIDIA hardware). Moving a model or tensor between CPU and GPU takes a single call such as .to("cuda"), which speeds up computation-heavy tasks.

- Built-In Support for Tensors: Tensors, the core data structure in deep learning, are PyTorch's native type, with optimized CPU and GPU implementations of common operations, which makes it efficient for handling large datasets.

- High-Level API for Neural Networks: PyTorch's torch.nn module offers high-level APIs for building neural network layers, simplifying the model-building process.

- Interoperability with NumPy: PyTorch is highly compatible with NumPy, allowing for easy data manipulation and integration with Python's scientific computing ecosystem.
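The define-by-run and GPU points above can be illustrated with a short sketch. The model below (RepeatNet, a hypothetical name) applies the same layer a caller-chosen number of times using a plain Python loop, so each call records a graph of a different depth. The device line falls back to the CPU when no CUDA GPU is available.

```python
import torch
import torch.nn as nn

# Pick the GPU if one is visible to PyTorch, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


class RepeatNet(nn.Module):
    """Hypothetical toy model: applies the same layer a data-dependent number of times."""

    def __init__(self, dim: int = 4):
        super().__init__()
        self.layer = nn.Linear(dim, dim)

    def forward(self, x: torch.Tensor, steps: int) -> torch.Tensor:
        # Ordinary Python control flow: the graph is recorded as these lines run,
        # so each call can build a graph of a different depth.
        for _ in range(steps):
            x = torch.tanh(self.layer(x))
        return x


model = RepeatNet().to(device)        # move the parameters to the chosen device
x = torch.randn(2, 4, device=device)  # create the input on the same device

for steps in (1, 3):
    out = model(x, steps)
    out.sum().backward()              # gradients flow through however many steps ran
    print(steps, out.shape, model.layer.weight.grad.norm().item())
    model.zero_grad()                 # clear gradients before the next call
```

Because the graph is rebuilt on every call, no special construct is needed for the loop; the same approach works for recursion or if/else branches on tensor values.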

3. Advantages of Using PyTorch

The design philosophy and architecture of PyTorch make it particularly advantageous for certain tasks and user groups:

- Research-Friendly: PyTorch’s dynamic graph structure is more intuitive and flexible, making it ideal for researchers who experiment with complex models and unconventional architectures.

- Ease of Debugging: Debugging is more straightforward in PyTorch because operations execute immediately, so users can rely on standard Python debugging tools like pdb.

- Production-Ready with TorchServe: Developed by AWS and Facebook, TorchServe is a flexible tool for deploying PyTorch models in production, featuring easy-to-use APIs, scalability, and model management.

- Active Community and Ecosystem: As one of the most popular ML libraries, PyTorch has a strong community, extensive documentation, and a growing list of libraries and extensions, such as Hugging Face Transformers, which brings NLP and pretrained models to PyTorch.
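As a minimal sketch of eager-mode inspection, assuming a small hypothetical nn.Sequential model, a forward hook can capture an intermediate activation as an ordinary tensor while the model runs. A breakpoint() placed inside a custom forward method would pause at the same point under pdb.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 2))
activations = {}


def save_output(name):
    # Returns a hook that stores a detached copy of a layer's output.
    def hook(module, inputs, output):
        activations[name] = output.detach()
    return hook


# Register the hook on the ReLU (index 1) so every forward pass records its output.
handle = model[1].register_forward_hook(save_output("relu"))

x = torch.randn(4, 8)
logits = model(x)  # runs eagerly; the hook fires during this call

relu_out = activations["relu"]
print(relu_out.shape, (relu_out < 0).any().item())  # ReLU outputs are never negative
handle.remove()    # detach the hook when done inspecting
```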

4. Core Components of PyTorch

Understanding PyTorch’s core components is essential for effectively leveraging its capabilities. Here’s a breakdown:

a. PyTorch Tensors

Tensors are multi-dimensional arrays, similar to NumPy arrays, but they can be placed on GPUs and can record the operations applied to them for automatic differentiation. They are the fundamental building blocks in PyTorch, used for input data, model parameters, and intermediate computations.
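A short sketch of common tensor operations and of the NumPy bridge: torch.from_numpy and Tensor.numpy share memory on the CPU, while torch.tensor makes a copy. The array values are arbitrary.

```python
import numpy as np
import torch

a = np.arange(6, dtype=np.float32).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]

t = torch.from_numpy(a)   # shares memory with the NumPy array (CPU only)
t[0, 0] = 100.0
print(a[0, 0])            # 100.0: the change is visible through the NumPy array

b = t.numpy()             # back to NumPy, again without copying
c = torch.tensor(a)       # torch.tensor always copies the data
c[0, 0] = -1.0            # so this does not affect a or t

# Basic tensor operations mirror NumPy semantics, including broadcasting.
row_sums = t.sum(dim=1)                      # tensor([103., 12.])
scaled = t * torch.tensor([1.0, 2.0, 3.0])   # multiplies each row elementwise

# Move to a GPU when available; .cpu() is needed before converting back to NumPy.
device = "cuda" if torch.cuda.is_available() else "cpu"
on_device = t.to(device)
back = on_device.cpu().numpy()
```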

b. Autograd Module

The autograd module is PyTorch’s automatic differentiation library, which tracks operations on tensors and calculates gradients, making backpropagation easy and efficient for training neural networks.
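A worked example of autograd on a scalar function, f(x) = x^2 + 3x, whose derivative is 2x + 3 (7 at x = 2). It also shows that gradients accumulate across backward() calls, which is why training loops zero them before each step.

```python
import torch

x = torch.tensor(2.0, requires_grad=True)  # ask autograd to track operations on x
y = x ** 2 + 3 * x                          # y = 4 + 6 = 10; the graph is recorded as this runs
y.backward()                                # compute dy/dx through the recorded graph

print(x.grad)  # dy/dx = 2x + 3 = 7 at x = 2 -> tensor(7.)

# A second backward pass adds to the existing .grad instead of replacing it.
y2 = x ** 2 + 3 * x
y2.backward()
print(x.grad)  # tensor(14.)

# Disable tracking for inference to save memory and compute.
with torch.no_grad():
    z = x * 10
print(z.requires_grad)  # False
```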

c. torch.nn Module

This module is a high-level abstraction for building neural networks. It contains various predefined layers and loss functions (optimizers live in the companion torch.optim package), making it easier to create complex architectures like convolutional and recurrent neural networks.
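A sketch of a small convolutional classifier assembled from torch.nn layers, with the loss taken from torch.nn and the optimizer from torch.optim. The 1x28x28 input size, layer widths, and Adam learning rate are illustrative assumptions, not recommendations.

```python
import torch
import torch.nn as nn
import torch.optim as optim

# A small convolutional classifier for 1x28x28 inputs (sizes chosen for illustration).
model = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, padding=1),  # -> (N, 8, 28, 28)
    nn.ReLU(),
    nn.MaxPool2d(2),                            # -> (N, 8, 14, 14)
    nn.Flatten(),                               # -> (N, 8 * 14 * 14)
    nn.Linear(8 * 14 * 14, 10),                 # -> (N, 10) class scores
)
criterion = nn.CrossEntropyLoss()                    # loss function from torch.nn
optimizer = optim.Adam(model.parameters(), lr=1e-3)  # optimizer from torch.optim

images = torch.randn(16, 1, 28, 28)  # dummy batch of 16 single-channel images
labels = torch.randint(0, 10, (16,))

# One training step.
logits = model(images)
loss = criterion(logits, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(logits.shape, loss.item())
```

nn.Sequential suits a plain stack of layers like this; models with branches or custom logic usually subclass nn.Module instead.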

d. TorchScript

TorchScript bridges the gap between research and production by allowing developers to transform PyTorch code into a form that can be optimized and run independently from Python, making it more suitable for deployment.
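A minimal sketch of compiling a module with torch.jit.script and round-tripping it through serialization. The Gate module is hypothetical; it contains a data-dependent branch, which scripting keeps (tracing with torch.jit.trace would record only the branch taken by the example input). An in-memory buffer stands in for a file path here.

```python
import io

import torch
import torch.nn as nn


class Gate(nn.Module):
    """Hypothetical example module with a branch that depends on the input data."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 4)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = self.linear(x)
        # Scripting compiles both branches of this if/else into the TorchScript program.
        if h.sum() > 0:
            out = torch.relu(h)
        else:
            out = -h
        return out


scripted = torch.jit.script(Gate())  # compile to TorchScript
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)     # serialize (a file path works as well)
buffer.seek(0)
loaded = torch.jit.load(buffer)      # the saved program can also be loaded from C++ via LibTorch

x = torch.randn(2, 4)
print(torch.allclose(scripted(x), loaded(x)))  # True
```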

e. TorchServe

Developed in collaboration with AWS, TorchServe is an open-source tool specifically for serving PyTorch models in production environments. It allows easy deployment, management, and scaling of models.

5. Getting Started with PyTorch

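Installing PyTorch is straightforward with pip, Python's package manager. The exact command depends on your operating system and, for GPU builds, your CUDA version; the install selector on the official PyTorch website generates the right one. Typical commands look like this:

```bash
# CPU-only build
pip install torch --index-url https://download.pytorch.org/whl/cpu

# GPU build (CUDA 11.8 wheels)
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
```

Once installed, here's a quick example of creating and training a simple neural network in PyTorch: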

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Define a simple neural network
class SimpleNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(784, 128)  # e.g. a flattened 28x28 image -> 128 hidden units
        self.fc2 = nn.Linear(128, 10)   # 128 hidden units -> 10 class scores

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = self.fc2(x)
        return x  # raw logits; CrossEntropyLoss applies log-softmax internally

# Initialize the network, loss function, and optimizer
model = SimpleNN()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

# Dummy data
x_train = torch.randn(64, 784)         # Batch of 64 random "images" (flattened 28x28)
y_train = torch.randint(0, 10, (64,))  # Random labels in [0, 10)

# Training loop
for epoch in range(5):
    optimizer.zero_grad()               # clear gradients from the previous step
    outputs = model(x_train)            # forward pass
    loss = criterion(outputs, y_train)  # compute the loss
    loss.backward()                     # backpropagate
    optimizer.step()                    # update the parameters
    print(f"Epoch {epoch+1}, Loss: {loss.item()}")
```

6. Popular Applications of PyTorch

PyTorch has gained popularity across a range of ML applications, from academia to industry. Here are some prominent use cases:

a. Natural Language Processing (NLP)

With libraries like Hugging Face’s Transformers, PyTorch has become a leading choice for NLP applications, including text classification, translation, sentiment analysis, and conversational AI.

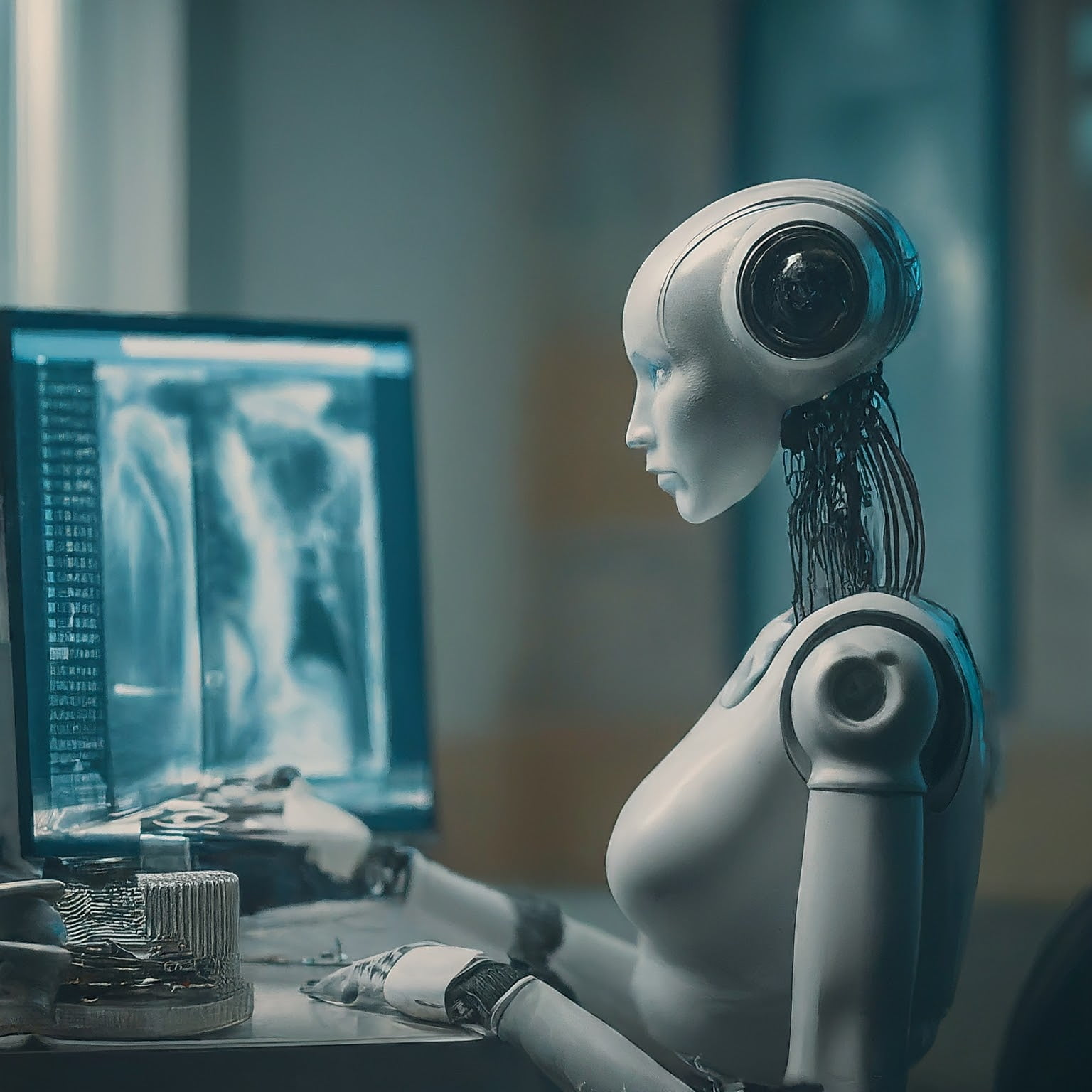

b. Computer Vision

PyTorch is widely used for computer vision tasks like object detection, image segmentation, and facial recognition. Libraries such as torchvision provide pre-trained models and utilities specifically for vision-based applications.

c. Reinforcement Learning (RL)

PyTorch’s flexibility with dynamic graphs makes it suitable for reinforcement learning. Researchers use PyTorch to build agents that learn optimal strategies for tasks like game playing, robotics, and automated trading.
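To make the idea concrete, here is a sketch of the REINFORCE policy-gradient method on a hypothetical two-armed bandit in which arm 1 always pays a reward of 1 and arm 0 pays nothing. The setup, learning rate, and step count are assumptions chosen for illustration; practical RL code would typically add variance-reduction techniques such as a reward baseline.

```python
import torch

torch.manual_seed(0)

# Hypothetical two-armed bandit: arm 1 always pays 1.0, arm 0 pays 0.0.
rewards = torch.tensor([0.0, 1.0])

logits = torch.zeros(2, requires_grad=True)  # policy parameters (start at 50/50)
optimizer = torch.optim.SGD([logits], lr=0.1)

for step in range(500):
    dist = torch.distributions.Categorical(logits=logits)
    action = dist.sample()                     # act according to the current policy
    reward = rewards[action]
    loss = -dist.log_prob(action) * reward     # REINFORCE: raise log-prob of rewarded actions
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

probs = torch.softmax(logits.detach(), dim=0)
print(probs)  # most of the probability mass should end up on arm 1
```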

d. Generative Models and GANs

PyTorch is widely used to build Generative Adversarial Networks (GANs), which learn a data distribution and generate new samples from it. PyTorch's simplicity and flexibility have led to its adoption in creative applications, including art generation, music synthesis, and text-to-image generation.
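A minimal sketch of the adversarial training loop behind GANs, assuming a toy one-dimensional target distribution (a Gaussian with mean 4 and standard deviation 1.25) and tiny fully connected networks. The discriminator learns to tell real from generated samples, and the generator learns to fool it. Sizes, learning rates, and the step count are illustrative; 200 steps is not enough to guarantee a good fit.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


def real_batch(n: int) -> torch.Tensor:
    # Hypothetical "real" data: samples from N(4, 1.25^2).
    return 4.0 + 1.25 * torch.randn(n, 1)


generator = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))
discriminator = nn.Sequential(nn.Linear(1, 16), nn.ReLU(), nn.Linear(16, 1))  # outputs a logit

bce = nn.BCEWithLogitsLoss()
opt_g = torch.optim.Adam(generator.parameters(), lr=1e-3)
opt_d = torch.optim.Adam(discriminator.parameters(), lr=1e-3)

for step in range(200):
    real = real_batch(64)
    fake = generator(torch.randn(64, 8))

    # 1) Discriminator step: label real samples 1 and generated samples 0.
    #    fake.detach() keeps this step from updating the generator.
    d_loss = (bce(discriminator(real), torch.ones(64, 1))
              + bce(discriminator(fake.detach()), torch.zeros(64, 1)))
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # 2) Generator step: push the discriminator to output 1 for generated samples.
    g_loss = bce(discriminator(fake), torch.ones(64, 1))
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()

with torch.no_grad():
    samples = generator(torch.randn(1000, 8))
print(samples.mean().item(), samples.std().item())
```

The generator step also writes gradients into the discriminator's parameters, but they are cleared by opt_d.zero_grad() before the next discriminator update, so they never affect it.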

e. Healthcare and Biomedical Applications

PyTorch is used extensively in medical image analysis and genomics, where deep learning models assist in diagnosing conditions from X-rays, CT scans, and other medical images, or in identifying patterns within genetic data.

7. Conclusion

PyTorch has emerged as one of the most popular ML and deep learning libraries, thanks to its ease of use, flexibility, and powerful features for both researchers and practitioners. With support for everything from basic ML tasks to cutting-edge AI applications, PyTorch continues to grow and evolve, driven by an active open-source community and support from major industry players like Facebook and Microsoft. Its dynamic graph structure, compatibility with Python, and ecosystem of libraries make it a versatile, research-friendly, and production-ready choice for deep learning and machine learning.