DALL-E 3: A Complete Guide to OpenAI’s Latest AI Image Generator

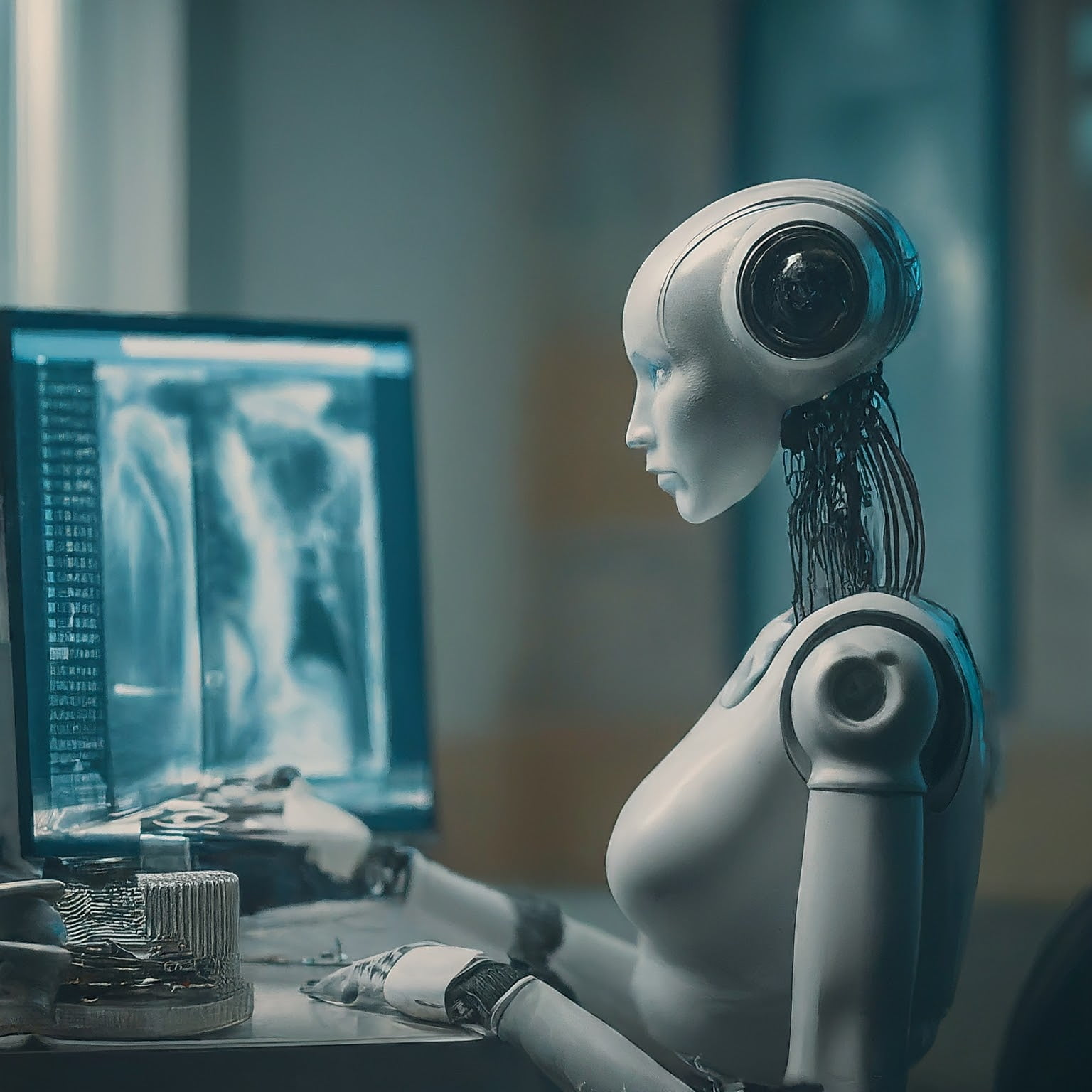

AI-generated images are advancing quickly, and DALL-E 3 is leading the charge. OpenAI’s latest model lets you create stunningly detailed visuals simply by describing them in text. With improved understanding of context, new styles, and high-definition options, it offers more control and precision than ever before. Whether you’re crafting visuals for work, art, or just exploring creativity, DALL-E 3 makes it easier to turn ideas into reality.

What is DALL-E 3?

DALL-E 3 is OpenAI’s latest text-to-image AI model, representing a significant stride in the world of artificial intelligence. By transforming written prompts into breathtakingly detailed visuals, it has set a new benchmark for creativity and image generation. But what makes DALL-E 3 truly stand out? In this section, we’ll explore its evolution and dive into how it crafts such precise and imaginative images.

The Evolution of DALL-E

DALL-E 3 emerges as the most advanced version of OpenAI’s image generators. Compared to DALL-E 2, it offers better image clarity, increased context understanding, and improved precision in rendering concepts from text. For instance, where DALL-E 2 might have struggled with interpreting ambiguous or complex prompts, DALL-E 3 excels by refining the way it processes these instructions.

Key differences include:

- Enhanced details: DALL-E 3 generates visuals with sharper and more accurate features, making images appear more realistic even when handling intricate requests.

- Stronger contextual comprehension: It understands nuanced prompts better, avoiding misinterpretations that could lead to irrelevant results.

- Reduction of errors: Issues like overlapping or inaccurate relationships between objects in an image have been significantly minimized.

OpenAI attributes part of DALL-E 3’s improvement to two changes: GPT-4 rewrites user prompts into more detailed descriptions before generation, and the image model itself was trained on more descriptive image captions. More details on what sets DALL-E 3 apart can be found on OpenAI’s official DALL-E 3 page.

How DALL-E 3 Generates Images

The process behind DALL-E 3’s image creation revolves around a diffusion-based model. This technique systematically refines an image over multiple iterations, starting from random noise and progressing toward a fully formed visual representation.

Here’s how it works:

- Text Prompt Analysis: The model first processes the written input, breaking it down to understand objects, styles, and relationships.

- Image Initialization: The diffusion process starts from an image of pure random noise, which at this point contains no recognizable shapes or patterns.

- Iterative Refinement: Over a series of steps, it adjusts colors, shapes, and textures to align the output closer to the prompt’s desired vision.

This iterative refinement allows DALL-E 3 to maintain high accuracy in details while adapting its output to the user’s request. Think of it like sketching: initial strokes are broad and rough, but over time, the drawing gains definition and character.
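
The refinement loop described above can be illustrated with a toy sketch. This is not DALL-E 3’s actual sampler; it only shows the general shape of the idea: start from random noise and repeatedly nudge the values toward a cleaner result. The "denoiser" here is a stand-in that already knows the target, and the names `toy_denoise`, `error`, and `target` are hypothetical.

```python
import random

def toy_denoise(target, steps=50, seed=0):
    """Toy illustration of iterative refinement: noise -> target.

    A real diffusion model uses a trained network to estimate what to
    remove at each step; this sketch cheats and uses the known target.
    """
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    history = [list(image)]
    for step in range(steps):
        # Close a growing fraction of the remaining gap; the last step closes it fully.
        blend = 1.0 / (steps - step)
        image = [x + blend * (t - x) for x, t in zip(image, target)]
        history.append(list(image))
    return history

def error(image, target):
    """Mean absolute difference between an image and the target."""
    return sum(abs(x - t) for x, t in zip(image, target)) / len(target)

target = [0.0, 0.25, 0.5, 0.75, 1.0]
history = toy_denoise(target)
print(f"start error: {error(history[0], target):.3f}")
print(f"final error: {error(history[-1], target):.3f}")
```

Each pass leaves the image a little closer to the target, which mirrors the sketching analogy: broad and rough first, refined later.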

For a practical guide on using DALL-E 3, check out this DataCamp tutorial covering its features in depth.

DALL-E 3’s combination of advanced text parsing and image generation processes isn’t just a technical upgrade—it fundamentally changes how people approach creativity. By blending intuitive user input with unparalleled output quality, it’s empowering artists, designers, and creators worldwide.

Key Features of DALL-E 3

DALL-E 3 represents a leap forward in AI-powered image generation. Built to take user creativity to the next level, it combines precision, quality, and accessibility, making it a standout tool for artists, marketers, and anyone seeking to transform ideas into visuals.

Improved Prompt Interpretation

Understanding text prompts has been a challenge for earlier AI models, but DALL-E 3 takes it to a new level of sophistication. Equipped with enhanced natural language processing, it deciphers complex and layered prompts with greater accuracy. For instance, when tasked with generating images featuring specific artistic styles, emotions, or intricate relationships between objects, DALL-E 3 performs with reliability.

This improvement is possible because of its integration with GPT-4, which re-structures prompts before passing them to the image generator. For more on how this works, you can refer to OpenAI’s breakdown of its prompt optimization approach. Whether your input involves nuanced human interactions or an abstract concept, DALL-E 3 ensures the result aligns closely with the vision.

Additionally, users no longer need extensive “prompt engineering” knowledge to get good results. DALL-E 3 handles casual instructions while still producing professional-looking output. This ease of use has drawn comparisons with tools like Stable Diffusion, with users noting clearer output for more complex inputs, as discussed in this analysis on Reddit.

Enhanced Image Quality

DALL-E 3’s ability to generate high-quality images is perhaps its most noticeable improvement. With more refined details, vibrant colors, and higher resolution, the images are polished and ready for use across platforms. Whether you’re creating realistic portraits, abstract illustrations, or stylistic designs, sharpness and detail hold up well, even in images that require intricate elements like fine textures or precise lighting.

This enhancement is particularly valuable for commercial users and content creators who rely on visuals to captivate audiences. Improvements aren’t limited to sharpness: color grading, depth, and the overall aesthetic feel have also been taken up a notch. According to a Microsoft guide on AI image improvements, this upgrade is crucial for its adoption in apps like Copilot and Bing.

However, it’s worth using the right platform to fully leverage these improvements. Some discrepancies in quality can exist depending on how DALL-E 3 is accessed. Detailed comparisons between API usage and integrations through apps like ChatGPT have been discussed in the OpenAI Community, which you can explore here.

User-Friendly Integration

Using DALL-E 3 isn’t limited to developers or tech experts. Its intuitive integration with platforms like ChatGPT and tools such as Bing Image Creator makes it accessible to almost anyone. Whether you’re chatting in ChatGPT or experimenting in Paint, generating images becomes seamless.

For example:

- ChatGPT: Users can describe the desired image directly in conversation, and DALL-E 3 will generate it within the chat.

- Bing Image Creator: With advanced settings and style options, users can instantly create graphics for blogs, social media, or presentations.

- Custom APIs: Developers can also integrate image generation capabilities into their apps by using the DALL-E API.
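
For developers, a request through OpenAI’s official Python SDK might look like the sketch below. The `images.generate` call and its `model`, `prompt`, `size`, `quality`, and `n` parameters belong to the SDK; the helper names `build_image_request` and `generate_image` are hypothetical. The code assumes the `openai` package is installed and an `OPENAI_API_KEY` environment variable is set.

```python
ALLOWED_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
ALLOWED_QUALITIES = {"standard", "hd"}

def build_image_request(prompt, size="1024x1024", quality="standard"):
    """Validate options and return keyword arguments for images.generate."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if quality not in ALLOWED_QUALITIES:
        raise ValueError(f"unsupported quality: {quality}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "size": size,
        "quality": quality,
        "n": 1,  # DALL-E 3 produces one image per request
    }

def generate_image(prompt, **options):
    """Send the request and return the URL of the generated image."""
    from openai import OpenAI  # imported lazily so the helper above works offline

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(**build_image_request(prompt, **options))
    return response.data[0].url
```

Keeping validation separate from the network call lets an application reject bad options before spending an API request.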

Platforms like Microsoft Copilot are leveraging DALL-E 3’s simplicity. This allows users to create and refine visuals with minimal effort, which is perfect for both beginners and professionals. For more details on its accessibility, take a look at this guide for simplifying integration.

Ultimately, DALL-E 3’s usability anchors its appeal. With hover-to-edit options, multiple style presets, and easy navigation, it bridges the gap between technical sophistication and everyday application. This not only ensures inclusivity but also expands its reach to a wider user base.

By improving interoperability and accessibility, DALL-E 3 empowers users to inject creativity across diverse domains without any steep learning curves.

DALL-E 3 vs Competitors

DALL-E 3 has made waves in the AI image generation market, but how does it fare against its competitors? To understand its positioning, we’ll explore comparisons with Midjourney, pricing strategies, and the strength of its community and support networks.

Comparison with Midjourney

Midjourney and DALL-E 3 are two of the most prominent players in text-to-image AI tools, offering unique strengths for their user bases. While both excel in generating high-quality images, their approach and user experience differ significantly.

User Control and Image Manipulation

Midjourney is prized for its level of control. Users can fine-tune settings, experiment with artistic styles, and receive multiple iterations for each image prompt. This customizability makes it ideal for experienced users such as designers and marketers looking for precise outputs. On the other hand, DALL-E 3 offers a simplified approach. It integrates seamlessly with tools like ChatGPT, making it highly accessible, but it lacks the granular control that Midjourney provides. If you’re seeking a balance between ease of use and creativity, DALL-E 3 might have an edge over Midjourney for general applications.

Flexibility and Contextual Understanding

Midjourney excels in detailed image quality and stylistic consistency, but DALL-E 3 sets itself apart with its contextual understanding. Thanks to integration with GPT-4, DALL-E 3 interprets prompts more effectively, especially when dealing with complex or abstract concepts. While Midjourney users may need longer prompts or iterative attempts for a nuanced output, DALL-E 3 achieves accurate results quickly, albeit with less customization.

For a deeper comparison, check out this Midjourney vs. DALL·E 3 overview.

Pricing Differences

Pricing plays a crucial role when choosing an AI image generation tool, and DALL-E 3’s structure offers significant advantages, especially for casual users.

- DALL-E 3: Usage is often bundled within subscriptions to platforms like ChatGPT Plus, costing around $20/month. For those using the API, pricing starts at $0.04 per image for standard quality and $0.08 for HD images, making it scalable depending on your needs. OpenAI has also made DALL-E 3 available for free with limitations in certain applications, making it accessible to new users. More details are available on OpenAI’s pricing page.

- Midjourney: Subscriptions start from $10/month (basic plan) and go up to $60/month for unlimited and advanced features. While its pricing may appear higher, experienced users often justify the cost thanks to Midjourney’s extensive control and output quality.

Choosing between these two depends on how often you use these tools and for what purposes. For budget-conscious creators or those relying on integrations like ChatGPT, DALL-E 3 has a clear edge. However, professionals requiring consistency and control may lean toward Midjourney.
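
To make the "how often" question concrete, here is a rough break-even sketch using only the figures quoted above ($0.04 and $0.08 per API image, about $20/month for ChatGPT Plus). The function names are hypothetical, and because the API prices are "starting at" figures, real costs may be higher for other sizes or options.

```python
# Per-image API prices in US cents, taken from the figures quoted above.
PRICE_CENTS = {"standard": 4, "hd": 8}
CHATGPT_PLUS_CENTS = 2000  # roughly $20/month, as quoted above

def api_cost_dollars(images, quality="standard"):
    """Estimated API cost in dollars for a number of images."""
    return images * PRICE_CENTS[quality] / 100

def break_even_images(quality="standard"):
    """Images per month at which API spend equals the subscription price."""
    return CHATGPT_PLUS_CENTS // PRICE_CENTS[quality]

print(api_cost_dollars(150))           # 6.0
print(break_even_images("standard"))   # 500
print(break_even_images("hd"))         # 250
```

Below those monthly volumes, pay-per-image API access is the cheaper route on these numbers alone. The comparison ignores everything else a subscription includes.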

You can explore additional pricing insights from this Zapier blog on AI tools.

Community and Support

The strength of user communities and support can strongly impact the long-term usability of a tool. In this aspect, both platforms have their distinct offerings.

- DALL-E 3: Given its integration with popular platforms like ChatGPT and Bing Image Creator, DALL-E 3 benefits from active and supportive online communities. From prompt-writing tips to troubleshooting, users can interact on forums like the OpenAI Community. However, direct support from OpenAI is somewhat limited, with users occasionally reporting slower responses for technical issues.

- Midjourney: Midjourney thrives on community interaction. Their dedicated Discord server acts as a hub for sharing creations, seeking advice, and learning from other users. This hands-on approach fosters a collaborative ecosystem that’s appealing to creative professionals. However, support for technical queries often relies on peer interaction, with less emphasis on official channels.

Both tools benefit from passionate users who contribute to knowledge sharing and creativity. If community plays a big role in your workflow, Midjourney’s active engagement might offer more value, while DALL-E 3’s integration with OpenAI platforms makes it easier for casual users to find help quickly.

For tips and tricks to get started, you can browse discussions like the DALL-E 3 prompt tips thread.

Challenges and Limitations

While DALL-E 3 showcases impressive advancements in text-to-image AI, it also comes with its set of challenges. Understanding these limitations is essential for anyone relying on the platform for creative or commercial purposes. Below, we’ll dive into specific areas where DALL-E 3 users may run into roadblocks.

Prompt Limitations

One of the most significant hurdles with DALL-E 3 lies in its constraints around prompt input. The platform enforces restrictions on the length of prompts, which can impede creative expression when users want to provide more detailed instructions. For instance, prompts sent to DALL-E 3 through the API are limited to 4000 characters. That is more than the 1000 characters DALL-E 2 allowed, but it can still frustrate users aiming for highly specific details. Source.
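
A simple pre-flight check can catch over-long prompts before they reach the API. This is a minimal sketch: `check_prompt_length` is a hypothetical helper, and it assumes the limit counts characters the same way Python’s `len()` does.

```python
MAX_PROMPT_CHARS = 4000  # DALL-E 3 API limit cited above

def check_prompt_length(prompt, limit=MAX_PROMPT_CHARS):
    """Return (ok, overflow), where overflow is the number of excess characters."""
    overflow = max(0, len(prompt) - limit)
    return overflow == 0, overflow

ok, overflow = check_prompt_length("A dense forest with tall pine trees")
print(ok, overflow)  # True 0
```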

Additionally, some users report that the model interprets prompts differently than expected. This happens because of the built-in GPT-4-powered prompt optimization, which rewrites user input, typically expanding it with extra detail, before it reaches the image generator. While this can improve some results, it can also change the intended meaning, leading to outputs that feel off-target. For example, graphic designers attempting precise compositions might find themselves rephrasing or redoing entire prompts to achieve the desired look. You can read more about similar challenges here.
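
When using the API, the response for DALL-E 3 includes a `revised_prompt` field showing the rewritten text, which makes it possible to check whether key details survived. The sketch below is one hypothetical way to do that with a plain substring check; `missing_terms` and the example strings are illustrative, not part of any SDK.

```python
def missing_terms(required_terms, revised_prompt):
    """Return the required terms that no longer appear in the rewritten prompt."""
    text = revised_prompt.lower()
    return [term for term in required_terms if term.lower() not in text]

# Stand-in for response.data[0].revised_prompt from an API call.
revised = "A photorealistic red bicycle leaning against a brick wall at dusk"
print(missing_terms(["red bicycle", "brick wall", "top-down view"], revised))
# ['top-down view']
```

A non-empty result is a cue to rephrase the original prompt or state the dropped detail more prominently.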

Image Consistency Issues

Though DALL-E 3 leads in generating high-quality visuals, consistency in image outputs remains a significant challenge. Users have often noticed inconsistencies in sharpness, exposure, and details when producing multiple variations of the same prompt. Some images may feature incorrect textures, mismatched colors, or subtle distortions that reduce their reliability for professional use. These issues are particularly evident when exploring complex or abstract designs, where the model can struggle to retain a cohesive style or theme across generations. Dive into detailed user observations here.

Moreover, the issue isn’t limited to aesthetics. Image quality can also degrade when creating outputs through specific platforms or integrations, such as Bing Image Creator or ChatGPT, rather than using DALL-E 3’s direct API. Smudged visuals or uneven resolution are frequent complaints in these cases, as highlighted in ongoing discussions within the OpenAI Community. For users looking to standardize their outputs, this variability poses a challenge.

Legal and Copyright Implications

The legal landscape surrounding AI-generated images is murky and evolving, especially when it comes to copyright protections. Since DALL-E 3 was trained on vast datasets, questions have been raised about whether generated images could unintentionally replicate copyrighted material. Additionally, generating an image does not by itself give the user a copyright in it: the U.S. Copyright Office has taken the position that works produced by AI without sufficient human authorship are not eligible for copyright protection, which in practice can leave them unprotected. Learn more about this classification in this recent overview.

This creates challenges for businesses or creators who wish to use these images commercially. For instance:

- Trademark Limitations: While unique characters or logos can be trademarked, users must ensure their AI designs don’t closely resemble existing trademarks.

- Lawsuits and Liability Risks: Some courts have begun addressing cases where output images closely resemble copyrighted works from AI training datasets. Concerns about these overlaps are detailed here.

For anyone relying on DALL-E 3 to create marketing materials, products, or other commercial assets, it’s crucial to seek legal advice to avoid potential disputes. OpenAI addresses usage of generated content in its terms of service, so users should stay informed to mitigate risks. Explore strategies for navigating these complexities in this practical guide.

Understanding these challenges is key to maximizing DALL-E 3’s potential while avoiding pitfalls. Whether you’re an artist, developer, or marketer, knowing these limitations will help you make better choices when working with the tool.

Best Practices for Using DALL-E 3

DALL-E 3 offers a wealth of opportunity for creators, but using it optimally requires more than just typing a general description. By adopting thoughtful practices, you can unlock more precise, high-quality visuals. Below, we explore key strategies to maximize the potential of DALL-E 3.

Crafting Effective Prompts

The backbone of achieving great results with DALL-E 3 lies in your prompts. Think of a prompt as the blueprint for your final image—every word matters.

Here are some tips:

- Be Specific and Detailed: Describe what you want with clarity. Instead of saying “A forest,” try “A dense forest with tall pine trees and sunlight streaming through the canopy.”

- Style and Mood: Include artistic choices such as “modern,” “surreal,” or “vibrant.” For example, “A surreal neon cityscape, glowing under a full moon.”

- Perspective and Composition: If applicable, specify vantage points like “looking up,” “over-the-shoulder shot,” or “wide-angle.”

- Lighting and Time of Day: These subtle details shape the atmosphere. For example, “A desert at sunset with soft, golden lighting.”

- Mix and Layer Ideas: Complex descriptions can help deliver unique results. For instance, “A Renaissance painting-style portrait of a cat wearing medieval armor.”
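
The tips above can be treated as slots to fill in. Here is a minimal sketch of a prompt builder that joins whichever slots are provided. The function `build_prompt` and its parameter names are hypothetical, and comma-joining is just one reasonable convention.

```python
def build_prompt(subject, style="", perspective="", lighting=""):
    """Join the non-empty parts into a single comma-separated prompt."""
    parts = [subject, style, perspective, lighting]
    return ", ".join(part.strip() for part in parts if part.strip())

prompt = build_prompt(
    subject="A dense forest with tall pine trees",
    style="surreal",
    perspective="wide-angle",
    lighting="soft, golden sunset lighting",
)
print(prompt)
# A dense forest with tall pine trees, surreal, wide-angle, soft, golden sunset lighting
```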

For additional ideas, check out this OpenAI community thread.

Iterative Refinement Strategies

DALL-E 3 works best when users embrace iteration. Rarely will the first output perfectly meet your expectations, but with incremental refinements, you can get closer to your vision.

Here’s how to refine prompts effectively:

- Make Small Adjustments: If the first output doesn’t quite work, tweak one element of your prompt. For example, adjust adjectives like “vivid” to “subtle” or rephrase unclear instructions.

- Highlight Desired Edits: Provide additional details for changes. For instance, if the image lacks dynamics, specify “add movement, like trees swaying or water rippling.”

- Experiment With Combinations: Layer different ideas across multiple iterations, such as combining “futuristic elements” with “ancient architecture.”

- Use Visual References: Include comparisons like “A castle similar to Hogwarts with steampunk elements.”
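
Changing one element at a time makes it easier to see what each tweak does. The sketch below generates prompt variants that differ in a single slot. The helper `one_change_variants` and the slot names are hypothetical.

```python
def one_change_variants(base, slot, options):
    """Yield copies of `base` that differ only in the value of `slot`."""
    for option in options:
        variant = dict(base)  # copy so the original stays untouched
        variant[slot] = option
        yield variant

base = {"subject": "a castle on a cliff", "style": "steampunk", "mood": "vivid"}
for variant in one_change_variants(base, "mood", ["vivid", "subtle", "muted"]):
    print(", ".join(variant.values()))
```

Each printed line is a complete prompt, so the resulting images can be compared side by side knowing only the mood changed.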

Not satisfied with your results? Try refining approaches discussed in this prompting guide on Medium.

Utilizing Integrated Platforms

DALL-E 3 is widely accessible through platforms such as ChatGPT and Bing Image Creator. These integrations simplify usage but require their own set of best practices for success.

Using ChatGPT with DALL-E 3

ChatGPT allows users to interact conversationally. To get better images:

- Iterate as a Dialogue: Think of ChatGPT as a collaborator. For example, reply with “Make the background brighter or add a vintage look.”

- Ask for Prompt Suggestions: ChatGPT can suggest phrasing to improve prompt clarity—especially helpful for creative newcomers.

Using Bing Image Creator

On Bing Image Creator, you will find a more visual-focused workflow:

- Explore Style Options: Experiment with pre-set filters such as “photorealistic” or “concept art.”

- Refine Through Regeneration: Generate multiple renderings of the same prompt to see how much the outputs vary.

For a comprehensive user experience walkthrough on both platforms, refer to DataCamp’s DALL-E 3 tutorial.

By following these practices, you’ll discover greater creative control and more compelling images. Whether you’re a first-time user or an experienced designer, every iteration builds your skill.

Conclusion

DALL-E 3 stands as a transformative tool for visual innovation with its ability to generate high-quality, detailed images from simple text. From empowering creative industries to enhancing educational tools and marketing strategies, its potential is undeniable. It not only simplifies complex design processes but also democratizes access to professional-grade visuals.

As AI like DALL-E 3 becomes part of daily workflows, it will likely expand capabilities, from better text integration within images to more refined creative controls. Whether you’re a designer, educator, or business professional, exploring its applications today can unlock new avenues for expression and efficiency.

What challenges or future advancements are you most excited to see? Let us know how you’d use DALL-E 3 in your projects.