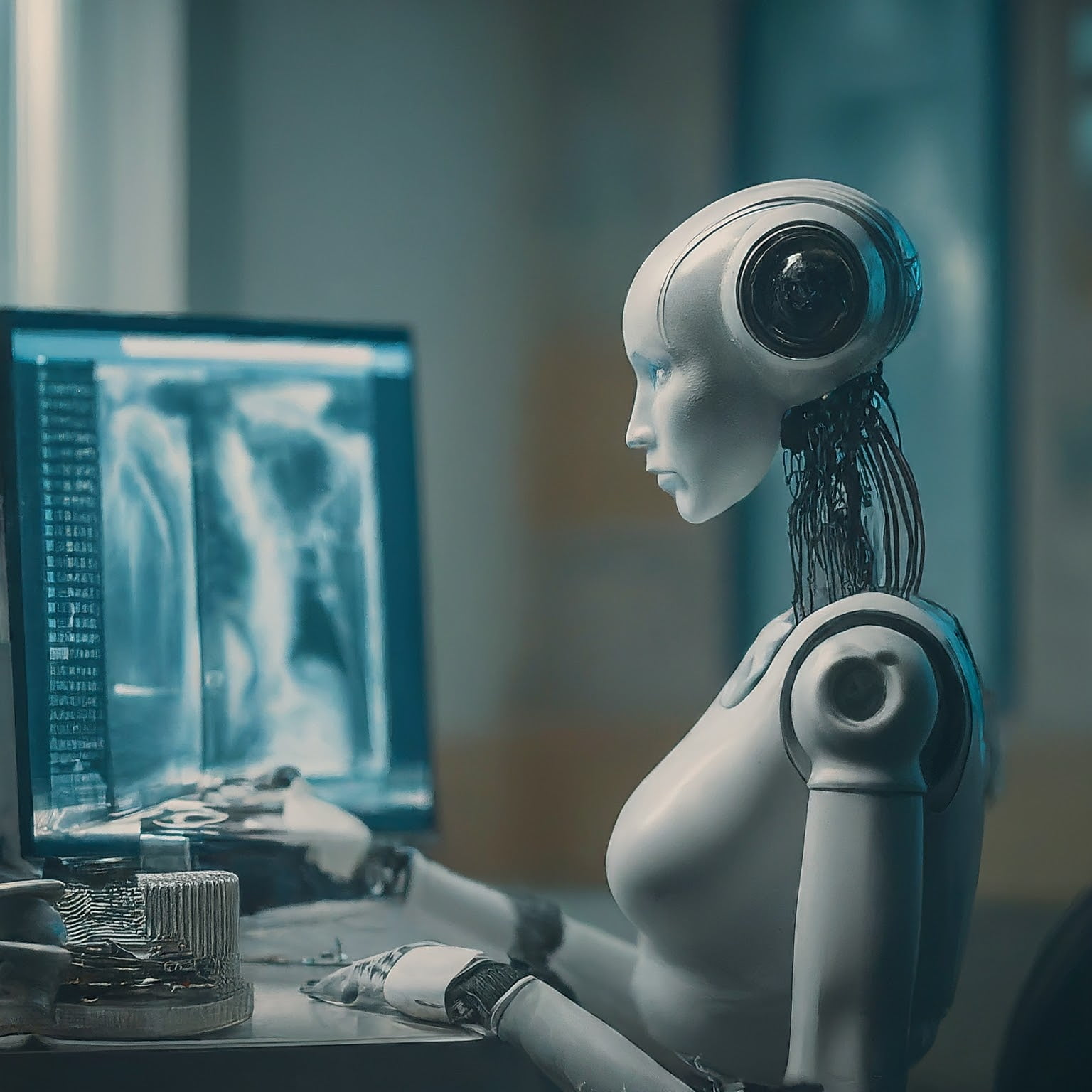

AI text to speech generated image

VQGAN+CLIP:

Imagine describing your wildest ideas and watching them turn into vivid, highly creative artwork within minutes. That’s exactly what VQGAN+CLIP does. By fusing two powerful AI models, it translates text prompts into stunning visuals, pushing the boundaries of what’s possible in digital creativity. This innovative tool has quickly become a favorite among artists, designers, and storytellers, opening up endless possibilities for generating unique, customized visuals. Whether you’re exploring AI art for fun or seeking new inspiration for your projects, VQGAN+CLIP is changing how we approach creativity.

How VQGAN+CLIP Works

VQGAN+CLIP is a fascinating blend of deep learning technologies designed to turn text prompts into visual art. The system marries two distinct AI models—VQGAN and CLIP—to offer an innovative approach to creative expression. Let’s explore how it all fits together and what makes this technology so compelling.

Understanding VQGAN and CLIP

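VQGAN (Vector Quantized Generative Adversarial Network) is the engine behind the image generation process. It functions as the “artist,” creating an image based on the input data. Strictly speaking, VQGAN is an autoencoder: an encoder compresses an image into a grid of latent vectors, vector quantization snaps each vector to the nearest entry in a learned codebook, and a decoder turns those discrete codes back into pixels. The “GAN” in the name comes from training, where a discriminator network pushes the decoder toward sharper, more realistic reconstructions. In VQGAN+CLIP, the pre-trained VQGAN is used as is: the system adjusts the latent codes and lets the decoder render them as an image.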
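To make the vector quantization idea concrete, here is a minimal sketch in plain Python. It assumes a tiny, made-up codebook of 2-D vectors; real VQGAN codebooks hold thousands of higher-dimensional entries. The names `CODEBOOK` and `quantize` are illustrative only and do not come from any VQGAN library.

```python
# Toy vector quantization: snap each continuous vector to its nearest codebook entry.
# CODEBOOK and quantize are illustrative names, not a real VQGAN API.

CODEBOOK = [
    (0.0, 0.0),
    (1.0, 0.0),
    (0.0, 1.0),
    (1.0, 1.0),
]


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(vector, codebook=CODEBOOK):
    """Return (index, entry) of the codebook entry closest to `vector`."""
    index = min(
        range(len(codebook)),
        key=lambda i: squared_distance(vector, codebook[i]),
    )
    return index, codebook[index]


# Three hypothetical latent vectors, as an encoder might produce.
latents = [(0.1, 0.2), (0.9, 0.1), (0.4, 0.8)]
codes = [quantize(v)[0] for v in latents]
print(codes)
```

Each latent is replaced by the index of its closest codebook entry, so an image ends up represented as a grid of discrete codes rather than free-floating numbers.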

CLIP (Contrastive Language–Image Pre-training), on the other hand, plays the “critic.” Developed by OpenAI, it embeds images and text in a shared space and measures how well an image corresponds to a given text prompt. That similarity score serves as feedback: at each iteration, the system nudges the latent codes that VQGAN decodes in the direction that raises the score, refining the output image step by step. This combination steers the visuals toward the input description. Learn more about how VQGAN and CLIP work together.
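The critic's job can be sketched with cosine similarity, the measure CLIP uses to compare an image embedding with a text embedding. The example below is a simplified illustration, assuming hand-written 3-D vectors in place of real CLIP embeddings (which have hundreds of dimensions); the embedding values and labels are invented.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings; a real system would get these from CLIP's encoders.
text_embedding = [0.2, 0.9, 0.1]
candidate_images = {
    "early iteration": [0.9, 0.1, 0.3],
    "later iteration": [0.25, 0.85, 0.15],
}

scores = {
    name: cosine_similarity(text_embedding, embedding)
    for name, embedding in candidate_images.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```

A higher score means the image embedding points in nearly the same direction as the prompt embedding, which is the signal the refinement loop tries to increase.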

The Text-to-Image Process

The process starts with a text prompt—this could be anything from “an abstract painting of the cosmos” to something as detailed as “a futuristic city skyline at sunset.” Once the user provides the prompt:

- VQGAN decodes a first image from a random starting point.

- CLIP steps in to evaluate the image against the text prompt and scores the match.

- The score feeds back into the generation step, gradually refining the image with each iteration.

This iterative refinement steers the final image toward the input text. Essentially, VQGAN+CLIP works like an artist and a critic collaborating, where the critic (CLIP) guides the artist (VQGAN) to create art that fits your vision. Check out this tutorial for more details on the text-to-image process.
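The artist-and-critic loop can be illustrated with a deliberately tiny stand-in. In this sketch, `decode` plays the role of the VQGAN decoder and `critic_loss` plays the role of CLIP; both are toy functions invented for this example, and the gradient is written out by hand instead of being computed by a deep learning framework. It shows the shape of the loop, not a real implementation.

```python
import random


def decode(latent):
    """Stand-in for the VQGAN decoder: maps a latent to an 'image' (a short vector)."""
    return [2.0 * z for z in latent]


def critic_loss(image, target):
    """Stand-in for CLIP: squared distance to the target, lower is a better match."""
    return sum((p - t) ** 2 for p, t in zip(image, target))


def loss_gradient(latent, target):
    """Hand-derived gradient of critic_loss(decode(latent), target) w.r.t. the latent."""
    return [4.0 * (2.0 * z - t) for z, t in zip(latent, target)]


def generate(target, iterations=50, step_size=0.1, seed=0):
    rng = random.Random(seed)
    latent = [rng.uniform(-1.0, 1.0) for _ in target]  # 1. start from random noise
    history = []
    for _ in range(iterations):
        image = decode(latent)                          # 2. the artist renders an image
        history.append(critic_loss(image, target))      # 3. the critic scores it
        grad = loss_gradient(latent, target)
        # 4. nudge the latent in the direction that improves the score
        latent = [z - step_size * g for z, g in zip(latent, grad)]
    return decode(latent), history


target = [0.5, -0.3, 0.8]  # hypothetical "prompt embedding"
image, history = generate(target)
print(history[0], history[-1])
```

The loss recorded in `history` shrinks as the loop runs, which mirrors how the real system's image drifts toward the prompt over successive iterations.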

Key Parameters for Generation

The quality of the generated image depends heavily on the parameters you choose. Here are the key ones:

- Seed Values: Think of this as a starting point for randomness. Using the same seed value with the same settings makes a run largely repeatable, although some GPU operations are not fully deterministic.

- Image Dimensions: Larger image sizes result in more detailed visuals but also require more computational power, since memory use and run time grow with the number of pixels. Typical sizes range from 512×512 pixels to higher resolutions like 1024×1024 pixels.

- Iterations: This determines how many times the system loops to refine the image. More iterations often lead to better outputs but increase processing time.

- Prompt Text: Your text is the foundation of the entire process. Clear, descriptive prompts yield more satisfying results.

Each of these parameters can significantly influence the final artwork. Experimenting with them helps you fine-tune the system to match your creative needs. Learn how different parameters affect the output.
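One way to keep these settings together is a small configuration object. The sketch below is an assumption about how you might organize them; `GenerationConfig` and `initial_noise` are hypothetical names, and the default values are placeholders, not recommendations from any particular notebook.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationConfig:
    """Hypothetical container for the parameters discussed above."""
    prompt: str
    seed: int = 42
    width: int = 512
    height: int = 512
    iterations: int = 300

    def pixel_count(self):
        return self.width * self.height


def initial_noise(config, length=4):
    """Same seed -> same starting noise, which is what makes a run repeatable."""
    rng = random.Random(config.seed)
    return [rng.random() for _ in range(length)]


small = GenerationConfig(prompt="a futuristic city skyline at sunset")
large = GenerationConfig(prompt=small.prompt, width=1024, height=1024)

same_start = initial_noise(small) == initial_noise(large)  # both use seed 42
cost_ratio = large.pixel_count() / small.pixel_count()     # pixels to process
print(same_start, cost_ratio)
```

Doubling both dimensions quadruples the pixel count, which is a rough guide to why larger images need so much more memory and time.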

Hardware and Software Requirements

Running VQGAN+CLIP requires both proper hardware and software. Here’s what you’ll need:

- Hardware: A capable GPU is essential. Plan on at least 8GB of VRAM for medium-sized images, while larger images may require up to 24GB of VRAM.

- Cloud-Based Options: Don’t have a powerful GPU? Use platforms like Google Colab, which allow you to execute VQGAN+CLIP remotely without buying expensive hardware.

- Software: Python is the go-to programming language for this process. Libraries like PyTorch, NumPy, and PIL (Pillow) are required to set up the environment and run the scripts.

For those new to the setup, there are plenty of online resources and pre-configured scripts to fast-track the process. If you’re using cloud-based platforms, the necessary tools often come pre-installed. Explore this resource to get started with hardware and software setups.
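Before running anything heavy, it can help to check what is actually installed. The following sketch uses only the standard library to look for the modules named above and, if PyTorch is present, asks it whether a CUDA GPU is visible. The helper name `check_environment` is made up for this example.

```python
import importlib.util

# Import names for the libraries mentioned above (Pillow is imported as "PIL").
REQUIRED_MODULES = ["torch", "numpy", "PIL"]


def check_environment(modules=REQUIRED_MODULES):
    """Report which modules are importable and whether a CUDA GPU is visible."""
    report = {
        name: importlib.util.find_spec(name) is not None for name in modules
    }
    report["cuda"] = False
    if report.get("torch"):
        import torch  # only imported when it is actually installed

        report["cuda"] = bool(torch.cuda.is_available())
    return report


report = check_environment()
for name, available in report.items():
    print(f"{name}: {available}")
```

If `cuda` comes back `False` on a local machine, a cloud notebook with a GPU runtime is usually the quicker path.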

With these fundamentals in place, you have the foundation for understanding what makes VQGAN+CLIP such a fascinating tool for modern creativity.

Popular Applications of VQGAN+CLIP Art

VQGAN+CLIP has captivated creators across various fields, offering limitless possibilities for artistic expression. Its ability to generate unique, eye-catching imagery has ushered in new ways to approach design, marketing, and even interactive art. Let’s explore a few of its most practical and exciting applications.

Concept Art and Design

In the creative industries, generating fresh ideas often feels like an uphill battle. This is where VQGAN+CLIP becomes an indispensable tool for concept artists and designers. By simply providing a text prompt, creators can generate visual concepts that fuel inspiration for:

- Storyboarding: Filmmakers and writers use it to generate visual storyboards, rapidly transforming abstract ideas into tangible frames to guide production.

- Product Design: From futuristic gadgets to innovative interior designs, the AI provides a broad range of iterations to help visualize initial concepts.

- Creative Direction: Artists can explore unique color combinations, patterns, and layouts for their projects, significantly speeding up the brainstorming process.

For example, an artist might input a phrase like “minimalist city skyline at dawn” and receive a starting point that sparks entirely new ideas. This effectively transforms the tedious sketching stage into something faster and far more dynamic. Learn more about how VQGAN+CLIP is being used in concept art.

Marketing and Branding

When it comes to standing out in the crowded world of branding, creativity is key. VQGAN+CLIP offers marketers a tool that generates entirely unique visual content, helping brands make a lasting impression. Here’s how it’s making waves in marketing:

- Custom Visuals: Designers can create bespoke graphics for social media posts, ensuring their campaigns feel authentic and engaging.

- Advertising Campaigns: From digital ads to billboard designs, VQGAN+CLIP allows marketers to experiment with bold, attention-grabbing visuals in minimal time.

- Brand Identity: Build distinctive imagery or patterns for logos, packaging, or websites that directly reflect brand values.

Brands can now push boundaries with visuals tailored perfectly for their target audience, breaking away from generic stock images. For instance, a travel company might use a text prompt like “serene mountaintop at sunset” to create campaign materials that instantly connect with potential customers. See how VQGAN+CLIP helps in generating marketing imagery.

Interactive Art Experiences

Interactive art is more accessible than ever, thanks to the fusion of AI and creativity. VQGAN+CLIP takes this concept further by enabling personalized, immersive projects such as:

- Art Installations: Museums and galleries can create installations where visitors input text prompts to generate real-time artwork, making the experience personal and participatory.

- NFT Creations: Artists use these tools to craft highly individualized, one-of-a-kind digital art pieces as non-fungible tokens, which are uniquely tied to blockchain technology.

- Gaming Environments: Developers are experimenting with this AI to design surreal and imaginative landscapes or props for interactive gaming worlds.

The technology invites audiences to co-create, blurring the lines between artist and viewer. Imagine an installation where your dreamlike descriptions come to life on a wall-sized screen—fascinating, right? Discover more about AI-powered interactive art.

VQGAN+CLIP is no longer just a model for creating static art; it’s helping expand the boundaries of real-time, customizable, and participatory experiences across industries. Whether for individuals or businesses, the possibilities continue to grow.

Pre-Trained Models and Style Transfer

The ability of VQGAN+CLIP to produce intricate and captivating visuals relies heavily on the pre-trained models and techniques available. These models and methods provide users with the flexibility to achieve distinctive artistic styles or fine-tuned outputs. Here’s how these tools work and how you can put them into action for your projects.

Overview of Pre-Trained Models

Pre-trained models help streamline the process by offering a foundation that has already been optimized. Some of the commonly used models include:

- imagenet_16384: Best for general-purpose artistic styles. This model was trained on ImageNet, a broad dataset, and uses a codebook of 16,384 entries, making it versatile for most text-to-image tasks.

- ade20k: Trained on the ADE20K scene dataset, it excels in architectural and natural scene compositions.

- ffhq (Flickr-Faces-HQ): Trained on high-quality face photos, it is well suited to generating lifelike human faces while retaining artistic control.

For those exploring VQGAN+CLIP, these models act like pre-defined canvases tailored for specific requirements. Unsure what fits your concept? Start with imagenet_16384 for an all-around option, and then experiment to find the best fit. Learn more about pre-trained models and their uses.

Style Transfer Techniques

Want to make your generated art match your preferred style? Style transfer enables you to overlay the characteristics of an existing image onto your generated content. This process blends the “content” of one image with the “style” of another. Here’s how it works:

- Begin with a “content image” you wish to use as a base.

- Pick a “style image” that embodies the aesthetic you want (e.g., Van Gogh’s brushwork or minimalist line art).

- Use style transfer software or scripts to integrate both, resulting in an entirely new, combined piece.

With style transfer, even stock images can be transformed into striking visuals that mirror your desired artistic flair. It’s like mixing ingredients to create the perfect recipe—balanced and unique. Explore the magic of style transfer here.
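The text above does not say which style transfer method it has in mind. One widely used formulation, neural style transfer as introduced by Gatys et al., summarizes “style” with Gram matrices of feature maps and penalizes the difference between them. The sketch below shows only that comparison, assuming tiny hand-written feature maps instead of activations from a real neural network.

```python
def gram_matrix(features):
    """features: list of channels, each a flat list of activations of equal length."""
    return [
        [sum(a * b for a, b in zip(ch_i, ch_j)) for ch_j in features]
        for ch_i in features
    ]


def style_loss(features_a, features_b):
    """Mean squared difference between the two Gram matrices."""
    gram_a, gram_b = gram_matrix(features_a), gram_matrix(features_b)
    n = len(gram_a)
    total = sum(
        (gram_a[i][j] - gram_b[i][j]) ** 2 for i in range(n) for j in range(n)
    )
    return total / (n * n)


# Hypothetical 2-channel feature maps, flattened to 2 activations each.
style_features = [[1.0, 2.0], [3.0, 4.0]]
generated_features = [[1.0, 2.0], [3.0, 5.0]]

gram = gram_matrix(style_features)
loss = style_loss(style_features, generated_features)
print(gram, loss)
```

In a full pipeline, this loss would be combined with a content loss and minimized by adjusting the generated image, so the result keeps the layout of the content image while picking up the textures of the style image.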

Combining Prompts for Unique Outputs

Why stick with one idea when you can merge multiple? Combining text prompts—often called “prompt blending” or “story mode”—gives you the ability to guide the AI with more complexity. Here’s what you can do:

- Mix Styles: Combine “vivid watercolor” with “futuristic digital art” in a single prompt to produce something unpredictable and exciting.

- Create Narratives: Use sequential inputs like “a serene forest” followed by “shrouded in fog with mysterious glowing lights” to develop layered, story-like visuals.

- Prompt Mixing: Tools allow you to average the vectors of two prompts, giving seamless results (e.g., “a lion” and “Gothic cathedral”). See examples of mixed prompts in action.

Success with combining prompts often comes down to experimentation. Play around with different orders and weights for prompts, and you’ll find a blend that sparks creativity.
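Prompt weighting and vector averaging can be sketched as follows. The example assumes a convention found in some VQGAN+CLIP notebooks, where prompts are separated by `|` and an optional `:weight` suffix sets each prompt's influence; check your own notebook, since the syntax may differ. The 2-D “embeddings” are invented stand-ins for real CLIP text embeddings.

```python
def parse_weighted_prompts(spec):
    """Split 'text:weight | text' into (text, weight) pairs; weight defaults to 1.0."""
    prompts = []
    for part in spec.split("|"):
        part = part.strip()
        text, sep, tail = part.rpartition(":")
        try:
            weight = float(tail) if sep else 1.0
        except ValueError:
            # The colon was part of the prompt text, not a weight suffix.
            text, weight = part, 1.0
        else:
            text = text.strip() if sep else part
        prompts.append((text, weight))
    return prompts


def blend_embeddings(embeddings, weights):
    """Weighted average of equal-length vectors."""
    total = sum(weights)
    return [
        sum(w * e[i] for e, w in zip(embeddings, weights)) / total
        for i in range(len(embeddings[0]))
    ]


prompts = parse_weighted_prompts("a lion:2 | Gothic cathedral")

# Hypothetical 2-D embeddings standing in for CLIP text embeddings.
fake_embeddings = {"a lion": [1.0, 0.0], "Gothic cathedral": [0.0, 1.0]}
blended = blend_embeddings(
    [fake_embeddings[text] for text, _ in prompts],
    [weight for _, weight in prompts],
)
print(prompts, blended)
```

Here the lion prompt carries twice the weight, so the blended vector sits two thirds of the way toward it.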

These techniques and tools open doors to infinite AI-generated visual possibilities, ensuring every piece you create feels personal and one-of-a-kind.

Tips for Optimizing VQGAN+CLIP Outputs

Getting the most out of VQGAN+CLIP requires a mix of creative thinking and technical adjustments. While the tool itself is a masterpiece, fine-tuning your prompts and parameters can significantly elevate the quality of your generated outputs. Here’s a guide to help you refine your results.

Choosing Effective Prompts

The text prompt you provide is the backbone of your entire creation. Crafting a clear and vivid prompt can make all the difference in the image quality. But how do you write an effective one?

- Be Specific: Replace vague descriptions like “beautiful painting” with more detailed ones such as “a surrealist painting of a blue forest under a glowing moon.” Specificity provides a stronger anchor for the model to work from.

- Incorporate Styles: Include mentions of artistic styles or influences. For example, “a steampunk cityscape in the style of Van Gogh” blends architectural themes with an iconic art style.

- Experiment with Modifiers: Try adding words like “highly detailed,” “minimalist,” or “vibrant colors” to influence the aesthetic. This can guide the AI to lean towards certain characteristics in your output visual.

If you’re just starting out, trial and error is part of the learning curve. Play around until you find the balance! Check out more tips for crafting impactful prompts.
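If you find yourself testing many variations, a small helper can assemble prompts from a subject, a style, and a list of modifiers. This is just a convenience sketch; `build_prompt` is a hypothetical function, and the phrasing it produces is one choice among many.

```python
def build_prompt(subject, style=None, modifiers=()):
    """Combine a subject, an optional style, and optional modifiers into one prompt."""
    prompt = subject
    if style:
        prompt += f" in the style of {style}"
    if modifiers:
        prompt += ", " + ", ".join(modifiers)
    return prompt


prompt = build_prompt(
    "a steampunk cityscape",
    style="Van Gogh",
    modifiers=["highly detailed", "vibrant colors"],
)
print(prompt)
```

Keeping the pieces separate makes it easy to swap one modifier at a time and compare the results.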

Fine-Tuning Advanced Parameters

Once your prompt is ready, optimization doesn’t stop there. Adjusting advanced settings can enhance the output even further. Here’s where tweaking comes in:

- Learning Rate: A lower learning rate makes smaller adjustments at each optimization step, which tends to preserve detail but takes longer to converge. Start small, around 0.1 to 0.2, and observe the impact on detail levels and coherence.

- Optimizers: Adam often works well, but consider experimenting with RAdam or others for smoother convergence. Each optimizer has its quirks, and the results may vary based on your prompt and settings.

- Augmentation Techniques: Techniques such as random cropping or color jittering are applied to the image before CLIP scores it. Scoring several varied views makes the feedback more robust and keeps the optimizer from fixating on quirks of a single view, which can improve coherence and detail in the final result.

These parameters can feel overwhelming at first, but small tweaks often lead to surprising improvements. For a deeper dive, explore how advanced parameters affect outputs.
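The random cropping idea can be sketched without any image library. In many VQGAN+CLIP implementations, the augmentation step takes several random crops (often called cutouts) of the current image and scores each one. The function below only picks the crop boxes; `random_cutouts` and its parameters are illustrative assumptions, not the API of a specific script.

```python
import random


def random_cutouts(width, height, count, min_fraction=0.5, seed=None):
    """Return `count` square crop boxes (left, top, right, bottom) inside the image."""
    rng = random.Random(seed)
    short_side = min(width, height)
    boxes = []
    for _ in range(count):
        # Pick a crop size between min_fraction of the short side and the full short side.
        size = rng.randint(int(short_side * min_fraction), short_side)
        left = rng.randint(0, width - size)
        top = rng.randint(0, height - size)
        boxes.append((left, top, left + size, top + size))
    return boxes


boxes = random_cutouts(512, 384, count=8, seed=0)
for box in boxes:
    print(box)
```

More cutouts per step generally give steadier feedback at the cost of extra memory, which is why this setting is a common lever when memory runs short.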

Troubleshooting Common Errors

Despite its capabilities, using VQGAN+CLIP isn’t without hiccups. Here’s how to tackle some common issues:

- CUDA Out-of-Memory Errors: If you encounter these, try lowering the image resolution or batch size (in many scripts, the number of image crops scored per step). Running on a GPU with higher VRAM, such as 16GB or more, can also mitigate this.

- Hardware Compatibility Challenges: Not every system is built for heavy machine learning tasks. Consider using platforms like Google Colab to offload computation to cloud GPUs.

- Library Installation Errors: Python environments can be tricky. Ensure dependencies like PyTorch and torchvision are installed using the specific versions recommended in tutorials. This guide can help resolve installation obstacles.

These solutions should resolve most issues you face. If problems persist, communities like Reddit’s r/deepdream or GitHub discussions are goldmines for troubleshooting advice.
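The “lower the resolution and try again” advice can be automated. The sketch below wraps a generation function and shrinks the image whenever it sees an out-of-memory error. It assumes the error surfaces as a `RuntimeError` whose message contains “out of memory”, which is how PyTorch typically reports CUDA memory exhaustion; `fake_generate` and its pixel budget are invented so the example runs without a GPU.

```python
def generate_with_fallback(generate, width, height, min_side=128):
    """Call generate(width, height); on an out-of-memory error, retry at 75% size."""
    while True:
        try:
            return generate(width, height), (width, height)
        except RuntimeError as error:
            if "out of memory" not in str(error).lower():
                raise  # unrelated error: do not hide it
            width, height = int(width * 0.75), int(height * 0.75)
            if min(width, height) < min_side:
                raise  # give up rather than produce a uselessly small image


def fake_generate(width, height):
    """Simulated generator that 'runs out of memory' above a made-up pixel budget."""
    if width * height > 400 * 400:
        raise RuntimeError("CUDA out of memory (simulated)")
    return f"image {width}x{height}"


result, size = generate_with_fallback(fake_generate, 640, 640)
print(result, size)
```

In a real script you would pass your own generation function in place of `fake_generate`, and you may also want to free cached GPU memory between attempts.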

With these tips, you’re well on your way to mastering VQGAN+CLIP. Perfecting your output is a marathon, not a sprint, so don’t hesitate to experiment and explore what this incredible tool has to offer!

Ethical Considerations in Using AI for Art

The rise of AI in art has sparked intense discussions about its ethical implications. From debates about originality to its societal impact, there are complex considerations for artists, developers, and users alike. Let’s examine some of the most pressing issues.

Authorship and Originality

When AI generates art, who gets the credit? Authorship is a central issue when discussing ethical considerations in AI art. Unlike traditional art, where a human artist’s creative vision is evident, AI-produced works rely on pre-trained datasets and algorithms. These systems often pull inspiration from existing works, raising questions about originality and intellectual property.

For instance, tools like VQGAN+CLIP might remix elements from millions of art pieces found in the datasets they were trained on. But if the AI is trained on copyrighted materials, is it unintentionally copying? Some argue that this undermines traditional copyright protections. In fact, current U.S. copyright law typically requires a “human author” for a work to be eligible for protection. AI outputs, while innovative, often do not meet this standard. Dive deeper into authorship and copyright challenges in AI art.

Beyond legalities, there’s a philosophical question: Does AI art have true originality? Or is it merely an amalgamation of what’s already been created? These debates encourage us to think carefully about how we give credit to AI-driven creativity.

Impact on Traditional Artists

The rise of AI-generated art has left many traditional artists concerned about their future. Is AI a competitor or a collaborator? For many, it feels like a double-edged sword.

On one side, tools like VQGAN+CLIP democratize art creation, making it accessible to those without traditional training. For hobbyists and marketers, AI art opens doors previously closed. However, this accessibility can lead to decreased demand for traditional art commissions. Why hire an artist when software can create something similar—and often faster? Explore more about the effects of AI art on traditional artists.

At the same time, established artists worry about their work being fed into algorithms without consent. Imagine creating an original painting, only to discover your style has been replicated by an AI model trained on your portfolio. Such practices spark debates about compensation, credit, and consent.

While AI won’t replace all artists, striking a balance is crucial. Respecting traditional art forms, compensating creators fairly, and recognizing the value of human touch are essential.

Bias in Pre-Trained Models

AI models are only as unbiased as the data they are trained on. Unfortunately, many datasets come with inherent biases—both cultural and systemic—that can inadvertently shape the art these models create.

For example, biases in gender, ethnicity, or cultural imagery may emerge when algorithms produce art reflecting the priorities of the dataset’s creators or their sources. Large image models such as Stable Diffusion have faced criticism for amplifying stereotypes. For instance, a model trained on Western-centric art may disproportionately highlight certain cultural aesthetics while underrepresenting others. Learn about how bias impacts AI art.

Additionally, this raises questions about whose perspectives are being presented. If models reinforce existing prejudices, are they stifling the diversity and inclusivity we aim for in art? It’s a challenge that developers and users must address together, ensuring that technology reflects the broad spectrum of human expression.

Correcting these biases requires critical intervention. Better curation of training datasets, increased transparency, and ongoing audits can help reduce harm and promote fairness in AI-generated creativity.

Getting Started with VQGAN+CLIP

Getting started with VQGAN+CLIP may seem daunting, but with some guidance, even beginners can create jaw-dropping AI-generated visuals. Let’s break the process into three manageable steps: setting up your environment, generating your first artwork, and exploring advanced features.

Setting Up Your Environment

Success with VQGAN+CLIP starts with a proper setup. You can choose between running everything on a local machine or leveraging platforms like Google Colab for easier access to resources.

Here’s how to set up your environment:

- Google Colab Setup:

  - Access Colab Notebook: Start with a notebook pre-configured for VQGAN+CLIP. One good option is this resource on GitHub.

  - Copy to Google Drive: Save this notebook to your Google Drive so you can edit and save your progress.

  - Connect to GPU Runtime: Go to “Runtime” in Colab, select “Change runtime type,” and set the hardware accelerator to GPU for smoother performance.

- Local Installation (if you prefer working offline):

  - Install Python and essential libraries like PyTorch, NumPy, and matplotlib.

  - Download pre-trained models, such as imagenet_16384, which you’ll need for generating images. Follow guides like this one for detailed instructions.

  - Check your GPU compatibility, as local generation requires a GPU with enough VRAM (ideally 8GB or more).

Whether you’re using Colab or your local setup, take the time to ensure dependencies are resolved. A properly configured environment can save hours of troubleshooting later on.

Running Your First Prompt

Ready to see VQGAN+CLIP in action? Here’s a basic walkthrough:

- Choose Your Text Prompt: Start simple. For example, “a surreal landscape of glowing mountains under a starry sky.”

- Set Parameters:

  - Define image dimensions (e.g., 512×512).

  - Select iterations (higher numbers yield more refined outputs; try 200-300 for starters).

  - Input your text prompt and adjust settings like learning rate or optimizer.

- Run the Code:

  - In Google Colab, execute each block of code step by step. Wait for the AI to generate your image based on the feedback loops between the VQGAN and CLIP models.

Once finished, your generated image will appear. Feel free to tweak the prompt or parameters and rerun for a variety of similar outputs. If you’re curious about detailed instructions, this starter guide will walk you through the process.

Exploring Advanced Features

As you get comfortable, dive into more advanced features like video generation and multi-prompt storytelling. These tools allow you to expand your creative possibilities:

- Video Generation:

  - Tools like those provided in this repository let you generate videos by interpolating between prompts or evolving a single prompt over time.

  - Try blending “a sunny meadow” into “a stormy forest” frame by frame for a dynamic, animated effect.

- Multi-Prompt Storytelling:

  - Combine multiple text prompts to tell a visual story. For example:

    - Step 1: “An ancient castle covered in vines.”

    - Step 2: “The castle dissolving into a field of flowers.”

    - Step 3: “The field of flowers transforming into a glowing galaxy.”

  - Experiment with weight parameters to determine how much influence each prompt has on the output. This method results in layered, narrative-driven visuals.

These features become more rewarding as you gain experience with the tool. Experimentation is key, and plenty of resources, like this tutorial, can guide you further.
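Frame-by-frame blending and multi-prompt stories both come down to a schedule of prompt weights. The sketch below builds such a schedule with a simple linear crossfade, which is an assumption for illustration; actual video tools may interpolate differently. `crossfade_weights` and `story_schedule` are hypothetical names.

```python
def crossfade_weights(num_frames):
    """Yield (weight_a, weight_b) per frame, moving linearly from prompt A to prompt B."""
    for frame in range(num_frames):
        t = frame / (num_frames - 1) if num_frames > 1 else 0.0
        yield (1.0 - t, t)


def story_schedule(prompts, frames_per_transition):
    """List per-frame weighted prompts that walk through `prompts` in order."""
    schedule = []
    for start, end in zip(prompts, prompts[1:]):
        for w_start, w_end in crossfade_weights(frames_per_transition):
            schedule.append([(start, w_start), (end, w_end)])
    return schedule


story = [
    "An ancient castle covered in vines",
    "The castle dissolving into a field of flowers",
    "The field of flowers transforming into a glowing galaxy",
]
schedule = story_schedule(story, frames_per_transition=5)
for frame in schedule:
    print(frame)
```

Each entry lists the prompts active in that frame along with their weights, which you could then feed to whatever weighted-prompt input your notebook supports.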

Now that you know how to set up and experiment with your first creations, the next steps can help you push boundaries even further. The platform offers a blend of technical flexibility and creative freedom, making it one of the most exciting tools for AI art enthusiasts.

Conclusion

VQGAN+CLIP is reshaping how we think about art and creativity. By merging advanced AI technology with artistic input, it opens up endless avenues for generating unique visuals. Artists and creators can experiment with different prompts, styles, and parameters, easily transforming their ideas into stunning imagery.

This tool not only accelerates the creative process but also invites users to explore their artistic horizons. Whether you are a seasoned artist or a curious beginner, now is the time to play around with VQGAN+CLIP. What ideas will you bring to life?

Embrace the limitless potential this technology offers and see where your imagination can take you. Thank you for exploring this exciting journey into AI-generated art!