5 AI Mistakes and How to Fix Them

AI tools are transforming how we work, create, and solve problems, but they’re not foolproof. Many users stumble into common pitfalls—like relying too heavily on AI or skipping the step of fine-tuning results—which can lead to missed opportunities or inaccurate outcomes. The good news? These mistakes are preventable with a bit of strategy and understanding. In this post, we’ll highlight the key missteps and, more importantly, share how you can avoid them to get the most out of your AI tools.

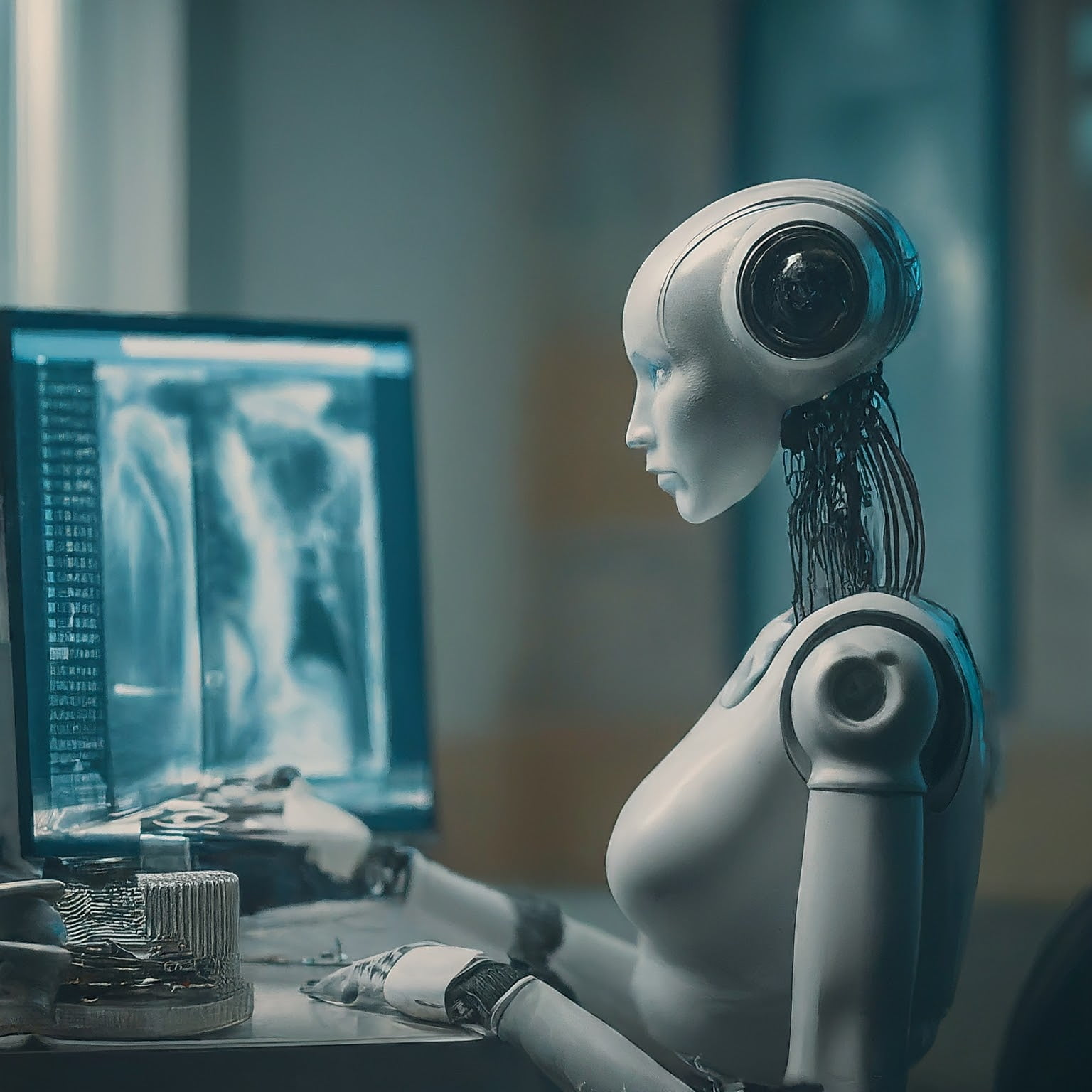

Over-Reliance on AI Tools

AI tools can be incredibly powerful, but depending on them too much can create challenges that are hard to ignore. While they offer convenience and efficiency, over-reliance can impact judgment, creativity, and accountability. Let’s break down some of the common issues and how you can maintain a healthy balance between AI and human decision-making.

Challenges of Dependency on AI

AI is designed to process data and generate insights, but it lacks qualities like empathy, intuition, and adaptability to nuanced situations. Over-relying on AI tools can negatively affect both outcomes and workflows.

- Misinterpretation of Context: AI systems analyze patterns and trends, but they don’t understand the deeper context or intent behind a task. For example, an AI-powered hiring tool might filter candidates based solely on keywords, potentially overlooking individuals who are a perfect cultural fit or have transferable skills.

- Lack of Human Empathy: In customer service or sensitive communications, AI-generated responses can feel robotic and tone-deaf. While chatbots can resolve simple issues, they often fail to address emotional nuances, leaving users frustrated or misunderstood.

- Increased Risk in Decision-Making: Automation bias occurs when people blindly trust AI-generated suggestions without questioning their accuracy. Imagine a financial advisor relying entirely on AI to predict market trends without verifying the data, leading to costly errors.

- Loss of Skills Over Time: The more we rely on machines to think for us, the less we exercise our own critical thinking, problem-solving, or creativity. This “use it or lose it” principle becomes a real concern when AI starts doing tasks traditionally handled by humans.

These problems reveal why dependency on AI should be monitored rather than allowed to replace human oversight altogether.

Balancing AI with Human Input

AI works best as a tool, not a standalone decision-maker. To get the most value out of your systems without losing your human touch, here are some practical strategies:

- Always Review AI-Generated Outputs: Treat AI suggestions as recommendations, not absolutes. Whether you’re reviewing marketing copy or analyzing customer data, it’s crucial to fact-check and refine the outputs to align with your goals.

- Prioritize Human Oversight on Critical Tasks: For decisions involving ethics, empathy, or creativity—such as crafting a legal argument or resolving a dispute—humans should remain at the helm. AI is great for insights, but it should never steer the ship completely.

- Use AI as an Assistant, Not a Replacement: Instead of delegating entire workflows, let AI handle repetitive, low-value tasks while you focus on strategy and decision-making. For example, use AI to generate data reports but interpret their implications yourself.

- Encourage Collaboration Between Humans and AI: Think of AI as an extra teammate. Allow it to handle the grunt work while your team brings the strategy, problem-solving, and interpersonal skills needed to oversee and fine-tune outputs.

- Train Your Team on AI’s Strengths and Weaknesses: Educate your team on what the tool can and can’t do. This helps set realistic expectations and ensures that employees know when to step in if something doesn’t seem right.
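
To make the review habit concrete, here is a minimal sketch of a routing rule that sends AI outputs to a person whenever the task is critical or the tool is unsure. The category names, the `confidence` field, and the 0.8 threshold are all assumptions made for illustration; your tool may not report a confidence score at all.

```python
from dataclasses import dataclass

# Hypothetical task categories that always need a person, per the guidance above.
CRITICAL_CATEGORIES = {"legal", "dispute", "ethics"}


@dataclass
class AIOutput:
    text: str
    category: str      # e.g. "report", "legal", "marketing"
    confidence: float  # assumed score in [0, 1] reported by the tool


def route(output: AIOutput, min_confidence: float = 0.8) -> str:
    """Decide whether an AI output needs full human review or a lighter spot check."""
    if output.category in CRITICAL_CATEGORIES:
        return "human_review"   # humans stay at the helm for critical work
    if output.confidence < min_confidence:
        return "human_review"   # the tool itself is unsure
    return "spot_check"         # still sampled by a person, never fully unattended


if __name__ == "__main__":
    draft = AIOutput("Q3 sales summary", category="report", confidence=0.93)
    print(route(draft))  # prints "spot_check"
```

Note that even the "safe" path ends in a spot check rather than automatic approval, which keeps a person in the loop for every kind of output.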

Striking the right balance is like using GPS. It’s okay to let the system guide you, but make sure to pay attention to the road signs along the way. Don’t let AI lead unchecked; it should work with you, not substitute for your judgment.

Ignoring Proper Data Preparation

When it comes to leveraging AI tools, the data you feed into the system dictates the quality of the results. Think of AI like cooking: even the best chef can’t prepare a gourmet meal with bad ingredients. Yet, one of the most common missteps people make is ignoring proper data preparation. This oversight not only undermines the usefulness of AI but can lead to errors, inefficiencies, and outright failures. Let’s explore why data preparation matters and how to get it right.

Impact of Poor Data Quality

AI thrives on high-quality, well-structured data. When that’s missing, the results can be disappointing—or even harmful. Here’s what can happen when data preparation is treated like an afterthought:

- Biased Outputs: Poor data quality often introduces biases. For instance, in 2018, a global retailer using AI for hiring scrapped its tool after discovering that it downgraded female candidates, because the historical hiring data it learned from favored men. The model reproduced that gender bias because the imbalance wasn’t addressed during data preparation.

- Inaccurate Predictions: AI models trained on incomplete or messy data may fail to capture patterns, leading to misguided decisions. For example, an AI forecasting tool might wrongly predict high stock demand if historical data is riddled with gaps or outdated information.

- Inefficiency: Dirty data wastes time and money. When customers of a financial firm faced delayed loan approvals, a closer look revealed the issue: the AI underwriting system was bogged down by inconsistent entries in applicant records. The inefficiencies rippled across business operations, frustrating both staff and clients.

These examples show that ignoring data preparation is not just a technical glitch—it’s a business risk.

Best Practices for Data Management

The good news? These kinds of mistakes are preventable. You don’t need to be a data scientist to implement solid data preparation practices. Here are some practical tips anyone can follow:

- Ensure Data Completeness: Any missing values in your data can cripple insights. Audit your datasets to ensure they have relevant and comprehensive information for the task at hand.

- Fix Inconsistencies: Inconsistent formatting—like varying spellings of the same term—throws AI tools off track. Use standard labels, units, and schemas to keep datasets clean and aligned.

- Eliminate Redundant or Irrelevant Data: Not all data is useful. Remove data entries that add noise rather than context. For instance, avoid training a customer service bot with irrelevant data from unrelated departments.

- Focus on Tagged and Labeled Data: Whether you’re training an AI model for image recognition or natural language tasks, detailed labels and tags improve accuracy. Imagine an AI learning to detect emotions in photos—if photos aren’t correctly tagged (e.g., “happy,” “sad,” “neutral”), the tool can’t learn properly.

- Regular Data Maintenance: Data preparation isn’t a one-and-done process. Real-world data evolves over time. Build a routine for ongoing checks so your data remains relevant and useful as conditions change.

- Use Automation Tools: Tools designed for data cleaning and validation can simplify preparation. They automatically detect duplicates, gaps, and outliers, saving you time.
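
As a rough illustration of the completeness, consistency, and redundancy checks above, here is a small sketch using only the Python standard library. The field names and the spelling map are hypothetical; a real pipeline would use its own schema and likely a dedicated data-cleaning tool.

```python
from collections import Counter

# Hypothetical mapping from spelling variants to one standard label.
CANONICAL = {"nyc": "New York", "new york city": "New York", "new york": "New York"}
# Hypothetical required fields for this example dataset.
REQUIRED_FIELDS = ("customer_id", "city", "amount")


def clean(records):
    """Return (clean_rows, report) after completeness, consistency, and duplicate checks."""
    report = Counter()
    seen = set()
    cleaned = []
    for row in records:
        # Completeness: skip rows with a missing or empty required field.
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report["incomplete"] += 1
            continue
        # Consistency: map spelling variants onto one standard label.
        city = str(row["city"]).strip()
        city = CANONICAL.get(city.lower(), city)
        normalized = {**row, "city": city}
        # Redundancy: drop rows that repeat an earlier row's required fields.
        key = tuple(normalized[field] for field in REQUIRED_FIELDS)
        if key in seen:
            report["duplicate"] += 1
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned, dict(report)


if __name__ == "__main__":
    rows = [
        {"customer_id": 1, "city": "NYC", "amount": 20.0},
        {"customer_id": 1, "city": "New York", "amount": 20.0},
        {"customer_id": 2, "city": "", "amount": 5.0},
    ]
    print(clean(rows))
```

The report counts how many rows were dropped and why, which gives you a simple number to track during routine maintenance.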

Preparing your data might feel tedious at first, but it’s a proven way to get better, more reliable results from your AI tools. Without it, you’re essentially trying to drive on a road filled with potholes—it’s bumpy, slow, and likely to lead nowhere.

Overlooking Customization

Many users approach AI tools as plug-and-play solutions, expecting them to deliver perfect results right out of the box. But here’s the truth: ignoring customization is like wearing one-size-fits-all clothing—it might work in theory, but in practice, it rarely fits. Customization ensures AI tools meet your unique needs, enhancing their usability, accuracy, and impact. If you skip this step, you’re likely leaving value on the table.

Why Customization Matters

By tailoring AI tools to your specific goals, you can dramatically enhance relevance, efficiency, and overall performance. A generic setup can only take you so far because it’s designed for broad applications, not your individual context. Let’s look at how customization plays a role across different industries:

- Retail: Imagine a retailer using AI for product recommendations. Without customizing the recommendation engine, it might suggest generic items that don’t resonate with shoppers. When tailored to user preferences—based on browsing history, purchase patterns, and seasonal trends—the tool can recommend products that feel personal, boosting sales and customer loyalty.

- Healthcare: In healthcare, AI tools are used to predict patient outcomes or flag potential issues. If a hospital uses an uncustomized algorithm built for general use, it might miss critical nuances in regional demographics or specific disease trends. Adapting the system to focus on local population data and organizational workflow makes its insights far more actionable.

- Education: Schools implementing AI-driven learning platforms often face challenges when they fail to modify content to fit their curriculum. A student in chemistry shouldn’t get lesson recommendations meant for biology. Personalized adjustments, such as aligning the tool with grade levels and syllabus topics, turn AI into a true teaching aid.

Skipping customization often leads to underperformance. It’s harder for a tool to hit the mark when it hasn’t been tuned for the task at hand.

Steps to Customize Effectively

Customizing AI tools doesn’t have to be overwhelming. Most tools are now designed to support tweaking and integration, making it easier for non-specialists to see results. Here’s how you can get started:

- Modify Settings for Specific Goals: Almost all AI tools allow users to adjust settings or input parameters. Make sure these align with your objectives. For instance, in marketing tools, you can tweak audience parameters to better target campaigns or refine ad copy outputs based on brand tone.

- Integrate APIs for Better Functionality: APIs (Application Programming Interfaces) let you connect your AI tool to other software you use. Let’s say you manage inventory: integrating sales data APIs ensures that your AI tool accounts for live stock levels when making demand predictions.

- Train the Tool with Relevant Data: Feeding your AI model high-quality, context-specific data helps train it for your needs. For a customer service chatbot, uploading industry-specific FAQs is a must to ensure accurate, relevant answers.

- Refine AI Outputs Based on Feedback: Customization doesn’t end with initial setup. Review the tool’s outputs regularly and adjust. For example, if you’re using a content generator for blog posts, you can fine-tune prompts to achieve the right tone and focus.

- Loop in Your Team for Insights: Your team knows the nuances of the tasks AI is supporting. Tap into that expertise when customizing. They can help identify where the tool needs more specificity or which outputs need improvement.

- Experiment and Iteratively Improve: Don’t be afraid to test different configurations. Try running A/B tests on outputs and comparing the performance. Over time, you’ll learn what settings and integrations work best.
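
If you want to compare two configurations with more than a gut feeling, one common approach is a two-proportion z-test on their conversion (or success) rates. The sketch below is one way to do that with the standard library. The function name and the example counts are made up for illustration, and the test assumes reasonably large, independent samples.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two_sided_p) for the difference between two conversion rates."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        return 0.0, 1.0  # all successes or all failures: no measurable difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: configuration A vs. configuration B.
    z, p = two_proportion_z_test(120, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference between the two setups is unlikely to be chance alone, but it says nothing about whether the difference is large enough to matter for your goals.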

Customization helps AI tools work smarter, not harder. Think of it as a partnership between the tool and your goals—when you meet it halfway, the results can be transformative.

Failing to Monitor and Test AI Outputs Regularly

AI tools may seem like set-it-and-forget-it solutions, but skipping regular monitoring and testing is a recipe for trouble. Like leaving a car running on autopilot, failing to check in can lead to serious mistakes that snowball over time. When AI outputs are left unchecked, biases, errors, and inefficiencies can quietly creep in, undermining the tool’s purpose and creating issues you never saw coming.

Common Pitfalls of Inadequate Monitoring

Neglecting to monitor and evaluate AI outputs regularly can lead to problems that aren’t just inconvenient—they can be damaging to your business, workflow, or customer trust. Here are some of the most common risks:

- Unchecked Biases: AI tools don’t think; they learn from data. If that data reflects existing biases, the AI will replicate and even amplify them. For instance, a recruitment AI might unknowingly favor one demographic if the training data skews in that direction. Without monitoring, such biases go unnoticed until they cause significant damage.

- Data Mismatches: AI systems are built to work within specific contexts. If the input data changes—like shifting customer behaviors or new market trends—the AI may generate irrelevant or outright incorrect results. This mismatch can lead to poor decision-making, like offering outdated recommendations to customers.

- Systemic Errors Over Time: Small, unchecked errors can cascade into larger problems. For example, an AI prediction tool that incorrectly forecasts demand for a product might lead to major supply chain disruptions. Once these errors are baked into processes, untangling the mess becomes much harder.

- Loss of Accuracy: AI models can deteriorate over time. Known as “model drift,” this occurs when real-world data shifts away from the data the model was trained on. A customer behavior AI, for instance, might falter as societal trends change, producing less reliable insights unless retrained.

- Legal and Ethical Risks: If sensitive data handling or decision-making biases go undetected, your company might face compliance issues or reputational harm. Imagine using AI for financial services and failing to notice patterns in its decisions that unintentionally discriminate against some applicants.

These are not rare occurrences but rather predictable scenarios in the absence of robust oversight. Ignoring these risks amounts to inviting trouble.
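
One simple way to put a number on the bias risk described above is to compare how often each group receives a positive decision. The sketch below computes per-group selection rates and the ratio of the lowest to the highest. The group labels and data layout are assumptions for illustration. A commonly cited rule of thumb, the “four-fifths rule” from US employment guidance, treats a ratio below 0.8 as a reason to investigate, not as proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group, so no disparity to measure
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical decision log: (group label, was the applicant selected?)
    log = [("a", True)] * 50 + [("a", False)] * 50 + [("b", True)] * 30 + [("b", False)] * 70
    print(selection_rates(log), impact_ratio(log))
```

A check like this is only a first signal. It does not explain why the rates differ, so a low ratio should trigger a closer look at the data and the model.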

Strategies for Effective Testing and Monitoring

The good news is that there are ways to stay ahead of potential issues and keep your AI systems running smoothly. Regular testing and monitoring can help you identify and address problems before they become costly disasters. Try these practical strategies:

- Establish Clear Evaluation Criteria: Decide what success looks like for your AI tool. Is it generating accurate data, reducing customer complaints, or hitting specific performance metrics? Create benchmarks, define thresholds for acceptable outputs, and track key metrics regularly.

- Run A/B Testing: Don’t just assume AI hits the mark—test it. A/B testing allows you to compare different versions of your AI outputs. For instance, if you’re using AI for email marketing, you can compare conversion rates between human-crafted emails and ones optimized by AI to see which performs better.

- Regularly Audit AI Outputs: Think of audits as quality control. Periodically evaluate whether the AI is still aligned with your business goals, handling nuanced contexts correctly, and producing reliable outcomes. If errors are spotted, dive deeper to uncover the root cause.

- Plan for Retraining: Build cycles for model retraining into your workflow. If your industry or audience is evolving rapidly, consider retraining AI models every 6–12 months to keep them relevant. Use new and diverse data during retraining to ensure it covers emerging scenarios.

- Monitor for Bias Continuously: Beyond initial setup, conduct frequent checks for signs of bias creeping back into the system. Use tools that specialize in bias detection, and adjust inputs, parameters, or datasets as needed.

- Enable Alerts for Anomalies: Automation can streamline monitoring, especially for large-scale systems. Set up alerts that flag unusual or unexpected trends in the AI’s performance, such as a sudden drop in accuracy or a spike in errors.

- Get User Feedback: If your AI interacts directly with customers or employees, their feedback is invaluable. Encourage them to report quirky, inaccurate, or confusing outputs immediately. This real-world input can reveal blind spots you might not notice in testing environments.

- Deploy Version Control Practices: Treat AI configurations and updates like software. Implement version control systems so you can track changes, revert to previous states if needed, and identify what updates caused issues.
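
The benchmark and alerting ideas above can be sketched as a small rolling-accuracy monitor. It assumes you can eventually learn whether each prediction was correct; the class name, the baseline, and the window size are placeholders to adapt to your own setup.

```python
from collections import deque


class AccuracyMonitor:
    """Track recent prediction outcomes and flag drops below a baseline."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline      # accuracy measured when the tool was deployed
        self.tolerance = tolerance    # allowed drop before alerting
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, was_correct):
        self.outcomes.append(bool(was_correct))

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Wait for a full window so a few early misses do not trigger noise.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = AccuracyMonitor(baseline=0.9, window=10)
    for correct in [True] * 6 + [False] * 4:
        monitor.record(correct)
    print(monitor.rolling_accuracy(), monitor.should_alert())
```

A sustained alert from a monitor like this is a reasonable trigger for an audit or an earlier retraining cycle than the calendar would otherwise suggest.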

By proactively monitoring and testing your AI systems, you can ensure they stay on track, adapt to changes, and consistently deliver value. Think of it like regular maintenance on a machine—without it, even the most powerful tools will eventually break down.

Neglecting Security and Privacy Concerns

In an age where AI tools are integral to many workflows, overlooking security and privacy is a risk you can’t afford. While these tools streamline tasks and drive efficiencies, they also create openings for cyber threats, data misuse, and compliance violations. From unencrypted communication to accidental exposure of sensitive information, the stakes are higher than ever. Let’s explore how to safeguard yourself and your organization.

Understanding AI Security Risks

AI systems handle vast amounts of data, making them attractive targets for breaches. Many vulnerabilities can compromise sensitive information if precautions aren’t taken.

- Data Leaks: AI tools often process confidential or personal data. Without proper data encryption and storage measures, this information can be exposed to unauthorized parties. For example, tools transmitting unencrypted data over public networks are highly vulnerable to interception.

- Unauthorized Access and Model Theft: Many organizations fail to implement strict access controls. Credentials or excessive privileges granted for short-term projects often go dormant but remain exploitable by attackers. Worse, AI models can be reverse-engineered, leading to intellectual property theft or misuse.

- Adversarial Manipulations: Attackers can subtly alter AI inputs to mislead outputs—a phenomenon known as adversarial attacks. Imagine a self-driving car unable to interpret manipulated traffic signs, potentially causing chaos.

- Deepfakes and Disinformation: Cutting-edge generative AI is used to create deepfakes or spread misinformation. This technology lacks natural safeguards, so it can damage reputations, distort elections, or spread false claims about products.

- Weak API Security: AI tools often communicate via APIs, which, if unsecured, can expose the system to vulnerabilities that hackers can exploit for unauthorized control.

Understanding these threats is the first step toward implementing preventative measures that keep sensitive data out of harm’s way.

Ensuring Safer AI Usage Practices

Securing your AI workflows doesn’t have to be overwhelming. A few proactive steps can significantly reduce risks while ensuring compliance with privacy regulations like GDPR.

- Choose GDPR-Compliant Tools: Always pick AI systems that meet recognized compliance benchmarks. GDPR-aligned AI tools are built to respect data protection rights and safeguard personal information. Platforms that are transparent about their data loss prevention measures are a safer bet.

- Encrypt Data Across the Board: Sensitive data stored or transmitted by AI tools should always be encrypted. Even if unauthorized access occurs, encrypted data stays unreadable to attackers who don’t hold the keys. Tools offering end-to-end encryption provide an extra layer of security.

- Anonymize Sensitive Information: Before inputting data into an AI tool, strip it of identifiable markers. Anonymization reduces the risk of accidental exposure while enabling the tool to work without violating privacy rules.

- Disable Unnecessary Permissions: Audit who has access to your AI systems. Remove inactive or outdated credentials, and ensure users only have permissions necessary for their roles. Keeping privileges lean minimizes the potential attack surface.

- Train Teams on Cybersecurity Best Practices: Human error is often the weakest link in security. Train your team to identify suspicious activity, follow data protection protocols, and use secure passwords. Include lessons specific to managing AI tools, such as not sharing sensitive data in inputs.

- Use Zero Trust Architecture: Adopt a “never trust, always verify” model. Even internal users and devices should undergo regular authentication. This significantly reduces the chance of attackers moving laterally within your systems if they gain access.

- Conduct Regular Audits: Think of audits as health check-ups for your security setup. Regularly review your AI tools’ performance, check for unauthorized access, and update configurations. Breach simulations can test for vulnerabilities, allowing you to adapt before a problem arises.

- Monitor for Anomalies in AI Behavior: AI outputs can signal underlying issues. Unusual or erratic behavior might indicate compromised models. Regular monitoring makes it harder for rogue actors to exploit your systems undetected.
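
As a small example of stripping identifiable markers before text reaches an AI tool, the sketch below replaces email addresses and phone-like numbers with placeholders. The two patterns are deliberately simple assumptions for illustration. Pattern-based redaction only catches what the patterns describe, so names, addresses, and account numbers would need additional handling and review.

```python
import re

# Simple illustrative patterns; real data needs broader coverage and review.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    message = "Contact jane.doe@example.com or +1 (555) 010-9999 about order 42."
    print(redact(message))
    # prints "Contact [EMAIL] or [PHONE] about order 42."
```

Running the redaction step on your side, before any data leaves your systems, means the AI tool never sees the original values.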

By taking these precautions, you can continue leveraging AI’s benefits without exposing yourself to unnecessary risks. Remember, it’s easier to prevent vulnerabilities than deal with the fallout of security or privacy breaches.

Conclusion

Avoiding these common mistakes is key to making AI a valuable part of your workflow. By staying mindful of over-reliance, preparing your data carefully, customizing tools to your needs, monitoring outputs, and protecting security and privacy, you’ll sidestep many of the pitfalls that trip others up.

Remember, AI is a tool—not a replacement for strategy, creativity, or human oversight. Treat it as a partner that enhances your capabilities rather than something that takes over completely.

Start small, test often, and keep refining your approach. With thoughtful use, you can harness AI’s full potential while minimizing risks and frustrations. What steps will you take today to get more from your AI tools? The possibilities are in your hands.
